Unicode and Ruby

I gave a presentation called I18n, M17n, Unicode, And All That at the recent 2006 RubyConf in Denver. This piece doesn’t duplicate this presentation; it outlines the problem, some conference conversation, and includes a couple of images that you might want to steal and use in a future Unicode presentation. For those who don’t know, “i18n” is short for “internationalization” (i-18 letters-n), “m17n” for “multilingualization”, and you can call me “T1m”.

Background · The object of my presentation was to argue, to the Ruby community and its leadership, that I18n in general and Unicode in particular are really important technologies which deserve to be taken quite a bit more seriously than they currently are.

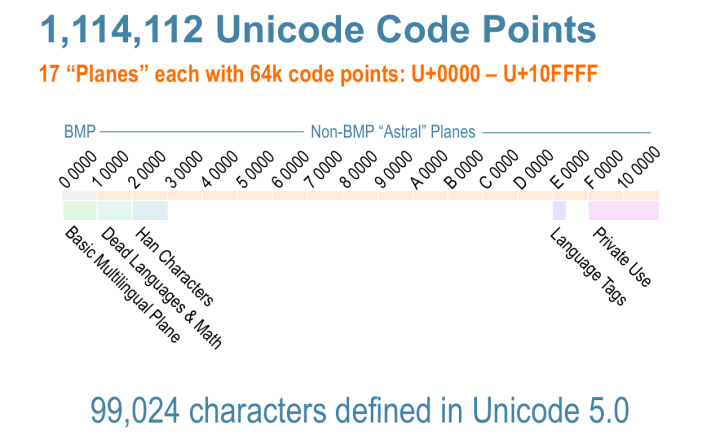

My 45 minutes included 20 or so introducing Unicode concepts and issues; I was a little worried that this might be old hat, but when I asked for a show of hands from people who thought they understood Unicode, damn few went up. So I guess this was OK. From that part of the presentation, here are a couple of pretty graphics illustrating the structure and layout of the Unicode character set.

Layout of the Unicode code-point space.

Unicode’s Basic Multilingual Plane.

The Problem · Right now, Ruby sees a String as a byte sequence, and doesn’t provide much in the way of character-oriented, as opposed to byte-oriented, functions. Also, it has very little built-in knowledge of Unicode semantics, aside from a few UTF-8 packing and unpacking capabilities, and some rudimentary regular expression support. Up till now, this hasn’t been seen as an urgent problem.
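To make the byte-versus-character distinction concrete, here is a minimal sketch. It assumes a present-day Ruby (1.9 or later), where strings carry an encoding; that is an assumption of this example, not the Ruby described above. The sample word is made up, and `String#b` is used only to approximate the byte-sequence view.

```ruby
# Assumes a modern Ruby (1.9+); the sample word is illustrative.
word = "na\u00EFve"            # i-diaeresis is U+00EF, two bytes in UTF-8

char_count = word.length       # counts characters (code points)
byte_count = word.bytesize     # counts bytes in the UTF-8 encoding

# A byte-oriented view of the same data, as a binary (ASCII-8BIT) string.
raw = word.b
raw_count = raw.length         # now one "character" per byte

# Byte-oriented reversal splits the two-byte character and leaves
# something that is no longer valid UTF-8.
byte_reversed = raw.reverse.force_encoding("UTF-8")
char_reversed = word.reverse

puts "chars=#{char_count} bytes=#{byte_count} raw=#{raw_count}"
puts "byte-reversed still valid UTF-8? #{byte_reversed.valid_encoding?}"
puts "char-reversed: #{char_reversed}"
```

The point is only that the two views give different answers to simple questions like "how long is this?" and "what is this backwards?".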

A solution has been promised in Ruby 2 (due next year, maybe); Matz calls it M17n and has made some general remarks in speeches but hasn’t published much in the way of code or documentation.

When I was preparing this talk, I sent email off to the Unicode people saying “Hey, I’m going to be plugging your baby to an eager audience of a few hundred eager programmers, want to send me some advertising?” They sent me some leaflets, but also a pre-release bound copy of the Unicode 5.0 manuscript. All the previous versions of Unicode have been impressive and beautiful books, and it looks like 5.0 will carry on the tradition.

Before my presentation, I was at the front of the room getting set and talking to Matz, who was a little worried, I think, that I’d come in raining fire and brimstone on all those who are not members of the Church of Unicode. I got the 5.0 book out and told the organizers they could raffle it off (then an idea struck) “...unless Matz wants it” and he did, so he has it now.

Some Progress · I had a talk over lunch with Matz and he made a couple of pretty good points. First, for operating on the Web, you just gotta do Unicode because it’s an inherently multilingual place and Unicode is the only plausible way to deal with texts that combine characters from lots of different languages. But for people who are using Ruby to process their own data on their own computers, compulsory Unicode round-tripping could be a real problem, because it can cause breakage. For example, take currency-symbols. In Japan, there is ambiguity in the JIS encodings as to where the ¥ (Yen) symbol goes; anyone who’s used a Japanese keyboard and seen a “¥” pop up when you hit the “\” key knows about this.

There’s another problem right here in our own round-eye gringo back yard; namely the blurring of the border between two immensely popular encodings, ISO-8859-1 and Microsoft Windows 1252. Sam Ruby’s Survival Guide to I18n (from 2004) covers these issues; also see a couple of slides starting here, Copy and Paste, and Mozilla bug 121174 comment #23.
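A small sketch of where the two encodings part ways, assuming a present-day Ruby (1.9 or later) and its bundled transcoders. The sample bytes are invented for illustration; the only fact relied on is that bytes 0x80 through 0x9F are printable characters in Windows 1252 and C1 control codes in ISO-8859-1.

```ruby
# Assumes a modern Ruby (1.9+) with its bundled transcoders.
# Bytes 0x93 and 0x94 are where the two encodings disagree.
raw = "\x93Unicode\x94".b

as_1252   = raw.dup.force_encoding("Windows-1252").encode("UTF-8")
as_latin1 = raw.dup.force_encoding("ISO-8859-1").encode("UTF-8")

puts as_1252.inspect     # curly quotes, U+201C and U+201D
puts as_latin1.inspect   # C1 control characters, U+0093 and U+0094

# Going the other way: a curly quote has no ISO-8859-1 byte at all.
begin
  "\u201C".encode("ISO-8859-1")
  latin1_accepts_curly_quote = true
rescue Encoding::UndefinedConversionError
  latin1_accepts_curly_quote = false
end
puts "ISO-8859-1 can hold a curly quote? #{latin1_accepts_curly_quote}"
```

Text labeled ISO-8859-1 that actually came from a Windows 1252 source will therefore round-trip through Unicode with its quotation marks turned into invisible control characters.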

So the problem with Ruby is: how do we make it easy and effortless for the zillions of people who really need Unicode to Just Work, while at the same time allowing others to avoid the potential round-trip breakage?

I don’t know the answer, but lots of people are looking at the problem, along with Matz. Julian Tarkhanov and Manfred Stienstra have been working on ActiveSupport::MultiByte (get it from Edge Rails), Nikolai Weibull on the character-encodings project, and the JRuby guys are trying to build Ruby on a platform that’s natively Unicode.

Comment feed for ongoing:

From: Daniel Haran (Oct 23 2006, at 13:30)

All this encoding stuff is something we lesser mortals hit upon far too often. I'm glad there are some very learned people working on it. Thanks!

From: Alper Çugun (Oct 23 2006, at 13:55)

I'm interested in this stealing thing. Anywhere we can see the whole presentation?

From: Nico (Oct 23 2006, at 13:59)

Do not forget normalization... Normalization on streams, normalization-insensitive string comparison. Normalization checking vs. actual normalization. And so on. Also, Unicode strings need to be traversable codepoint-at-a-time, grapheme-at-a-time, and grapheme-cluster-at-a-time! And GUI apps will need to be able to count glyphs, width, etc...
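A rough sketch of a few of these points, assuming a present-day Ruby (2.5 or later) rather than the Ruby of this thread. `same_text?` is a hypothetical helper name, and the sample strings are invented.

```ruby
# Assumes Ruby 2.5+ (String#unicode_normalize arrived in 2.2,
# String#each_grapheme_cluster in 2.5).
composed   = "caf\u00E9"      # e-acute as one code point
decomposed = "cafe\u0301"     # "e" followed by COMBINING ACUTE ACCENT

# Plain comparison is sensitive to the normalization form.
naive_equal = (composed == decomposed)

# Hypothetical helper: normalization-insensitive comparison via NFC.
def same_text?(a, b)
  a.unicode_normalize(:nfc) == b.unicode_normalize(:nfc)
end
normalized_equal = same_text?(composed, decomposed)

# Normalization checking, as opposed to actual normalization.
already_nfc = decomposed.unicode_normalized?(:nfc)

# Two of the traversal granularities: code points and grapheme clusters.
codepoint_count = decomposed.each_char.count
grapheme_count  = decomposed.each_grapheme_cluster.count

puts "naive=#{naive_equal} normalized=#{normalized_equal} nfc?=#{already_nfc}"
puts "codepoints=#{codepoint_count} graphemes=#{grapheme_count}"
```

Stream normalization and glyph or width counting are not covered here; they need more machinery than a string method.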

From: Tim Bray (Oct 23 2006, at 14:51)

A PDF of the whole talk is at http://www.tbray.org/talks/rubyconf2006.pdf

-Tim

From: Damian Cugley (Oct 23 2006, at 14:58)

Working Unicode support is one reason for using Python for web development rather than Ruby.

Actually, Unicode support is also a reason to use Python rather than C#: the .NET framework does not have the equivalent of Python’s unicodedata module [1].

[1]: http://docs.python.org/lib/module-unicodedata.html

From: Nico (Oct 23 2006, at 15:00)

This reminds me of how optimizations that Icon had for ASCII strings don't hold in a Unicode world. Icon used two-pointer-word sized "descriptors" to represent values, and much like many Lisps try to squeeze some type information into pointer words, Icon does the same, particularly type and, in the case of strings, string length. Since Icon strings are not null-terminated this made the sub-string operation trivial and _fast_, and string concatenation, when you got lucky or when concatenating a new string to a new string, could go really fast too. Destructive assignments to sub-strings work, and "csets" (character sets) take up only 32 bytes. Most of this breaks in a Unicode world. Destructive assignment to sub-strings breaks - the assignment might change the length of the string being assigned to and there's no way to find all the places that refer to the larger string and replace their references to the new string. Say goodbye to representing character sets as bitmaps. And the sub-string operation? It can't be constant time anymore - because of combining marks you have to sub-string on grapheme clusters, or at least graphemes, else the substring operation can lose data! Oof! Ouch!

From: Aristus (Oct 23 2006, at 15:00)

Slightly outside of the "roundeye gringo backyard" is a host of countries whose characters are not included in iso-8859-1 or win-1252, either. Nor is it always possible to divine the character set or language of a particular text. Devs in the "new EU" have twice the headaches of anyone in Japan or America... though I am glad I'm not dealing with shift encodings.

From: Steven Jackson (Oct 23 2006, at 15:15)

See also "The Absolute Minimum Every Software Developer Absolutely, Positively Must Know About Unicode and Character Sets (No Excuses!)" at http://www.joelonsoftware.com/articles/Unicode.html

From: Simon Willison (Oct 23 2006, at 15:17)

Have you looked at Python's unicode support? Python has two types of strings: regular (byte) strings and unicode strings. Each has methods to encode/decode to the other. This works OK but still confuses many developers, so I think the plan is for Python 3000 (the backwards-incompatible release planned for about two years from now) to default to unicode strings, with bytestrings as a separate type.

From: David N. Welton (Oct 23 2006, at 15:45)

Looks like the comments are fixed?

You left out Tcl in your language comparisons. It has had good unicode support for years, and mostly "just works" in that it's an integral part of the language, not a tacked-on data type.

From: Joel Norvell (Oct 23 2006, at 18:26)

Re: Unicode and Ruby

Hi Tim,

Thanks for yet another stimulating article!

I think you'd find it interesting and possibly instructive to look at how Mac OS X handles Unicode with their Objective-C classes: NSString, NSScanner and NSFormatter. I'd be most interested in your take on it!

http://developer.apple.com/documentation/Cocoa/Conceptual/Strings/index.html

Another possible resource for you is the "Ruby/Objective-C Bridge for Mac OS X with Cocoa." Although that's only my guess; I haven't had time to look at it yet.

http://rubycocoa.sourceforge.net/

My best,

Joel

From: Aristotle Pagaltzis (Oct 23 2006, at 20:35)

ObLanguagePlug: Perl has had solid Unicode support for quite a while.

Nico: well, that’s just a question of variable-width encodings. It doesn’t have anything to do with Unicode per se. If you need that sort of speed, trade memory for it by using UCS-4. Then every character is a single 32-bit word and you can do fast index math to locate characters without scanning strings. (I admit I’m not sure how well this plays with combining characters, though.)

However, most apps don’t need that. See the “You Look Inside?” section in http://www.tbray.org/ongoing/When/200x/2003/04/30/JavaStrings

From: Norbert Lindenberg (Oct 23 2006, at 20:50)

I don't understand Matz's argument about problems with using Unicode to process your own data on your own computer. If you know which version of Shift-JIS you're using, it's not that hard to implement character converters to and from Unicode that (a) map to Unicode code points so as to obtain correct semantics and (b) guarantee round-trip fidelity. Sun's Java runtime provides several flavors out of the box - JIS (Shift_JIS), Windows (windows-31j), Solaris (x-PCK), IBM (x-IBM943, x-IBM943C).

The real problem arises when you get text over the network that's labeled as Shift-JIS, because then you don't know which version of Shift-JIS it really is. The best way to avoid this problem is to convert from Shift-JIS to Unicode at the source, where you still know which Shift-JIS you have, and to send UTF-8 over the network.

From: Tim Bray (Oct 23 2006, at 21:35)

Aristotle: not true about Perl. I am quite competent with it, but I am unable to parse XML from a file, stuff pieces of it into MySQL, get them out again, and send them out on the web, without having characters damaged. So I crush everything into 7-bit and all non-ASCII characters are numeric character refs.

Norbert: You say "If you know which version of Shift-JIS you're using..." but they don't. They just have files on their computer that look OK on the screen, and round-tripping them to Unicode might break them. They might not even know there are versions of JIS.

From: Norbert Lindenberg (Oct 23 2006, at 22:30)

Tim, the Java runtime knows which version of Shift-JIS is used in which OS. The Ruby runtime could know too.

From: Dominic Mitchell (Oct 23 2006, at 23:42)

Tim: Regarding Unicode in Perl: Core Perl handles Unicode pretty well, since 5.8.0. The problem mostly lies in the extension writers who inhabit CPAN. I added Unicode support to DBD::Pg a couple of years ago, and my patch for Unicode has recently gone into the latest development version of DBD::mysql (3.007_01). It's not difficult stuff to do, it's just that most authors don't even know that they have to do it because "they stick text in and it works, man". They don't even think about Unicode issues. I guess that this is poor marketing on behalf of the Perl core.

Regarding Unicode in Ruby; thanks for the presentation. It's a lovely explanation of the issues involved. And you can't go wrong explaining Unicode again. Everybody needs to know how this stuff works! Props for giving Matz the Unicode book, too. :-)

From: Aristotle Pagaltzis (Oct 23 2006, at 23:48)

Tim: last I checked, DBD::mysql was still blissfully charset-ignorant. I don’t know if that’s the culprit in your case, but I’ve written a couple of scripts that plug XML::LibXML into DBD::SQLite and I didn’t have to do anything to get all the bits to play, the lot of them Just Worked. If you’re in search of a solution, I’d be happy to advise.

From: Harry Fuecks (Oct 24 2006, at 00:40)

Would have thought that ICU (http://icu.sourceforge.net/) is a foregone conclusion for anything C/C++ based these days - surprised not to see it mentioned. It's being used for PHP6 (http://www.gravitonic.com/downloads/talks/oscon-2006/php-6-and-unicode_oscon-2006.pdf) and other people have found joy with it (e.g. http://damienkatz.net/2006/09/fabric_now_has.html)

> And they're just giving it away. IBM I take back all the bad things I said about you. You're good people.

From: Nico (Oct 24 2006, at 08:05)

Aristotle: UTF-32 lets you trivially index _codepoints_. Throw in combining marks and grapheme joiners and you're back to variable-length encoding. You might then argue that normalizing to NFC wherever you can solves the problem; I'm not sure it does. But trivial indexation is not what I'm after here -- recognition that we can't really have it anymore is what I'm after :) (that should really be a sad face smiley).
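A small illustration of that doubt, assuming a present-day Ruby (2.5 or later); the sample text is invented. It relies on the fact that Unicode has a precomposed e-acute but no precomposed q-with-tilde, so NFC cannot collapse the latter.

```ruby
# Assumes Ruby 2.5+ for unicode_normalize and grapheme_clusters.
text = "q\u0303e\u0301"   # q + combining tilde, e + combining acute

nfc   = text.unicode_normalize(:nfc)
utf32 = nfc.encode("UTF-32LE")

# Fixed width holds for code points: code point i starts at byte 4 * i.
bytes_per_codepoint = utf32.bytesize / utf32.length

# NFC composed the e-acute, but q-tilde stays as two code points,
# so user-visible characters are still variable-length.
codepoints = nfc.codepoints.length
graphemes  = nfc.grapheme_clusters.length

puts "bytes per code point: #{bytes_per_codepoint}"
puts "code points: #{codepoints}, grapheme clusters: #{graphemes}"
```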

From: Nikolai Weibull (Oct 25 2006, at 01:21)

Nico: NFC doesn't solve all issues, no. It basically makes Unicode a fixed-width encoding for /western/ languages, i.e., basically the stuff covered in the ISO-8859-* encodings, but not much more.

Norbert: Whether Ruby can make an educated guess about which Shift-JIS is coming in is beside the point. People working with Shift-JIS simply don't want their text manhandled by Ruby. They want to deal with their text without a load of work going into converting to Unicode and then reconverting back to Shift-JIS (whichever flavor it may be).

Also, I don't think people in general want the forced conversion to Unicode. What if you're working on a Linux system, where text is assumed to be in UTF-8, but you have some data that you know is encoded as ISO-8859-1? You don't want to use Iconv to convert the string to UTF-8, you simply want everything to "just work". You want to be able to say something along the lines of File.open(path, 'r', 'ISO-8859-1') (or something equally trivial) and then work with the file in the specified encoding. That's my perception of it, anyway.
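For comparison, present-day Ruby (1.9 and later) accepts something close to that call by putting the encoding in the mode string. The sketch below assumes such an interpreter; the temporary file and its contents are made up for illustration.

```ruby
require "tempfile"

# Assumes Ruby 2.1+ for Tempfile.create; file contents are illustrative.
line = nil
Tempfile.create("latin1") do |f|
  f.binmode
  f.write("caf\xE9\n".b)   # 0xE9 is e-acute in ISO-8859-1
  f.flush

  # Declare the external encoding; no conversion to Unicode is forced.
  line = File.open(f.path, "r:ISO-8859-1") { |io| io.gets.chomp }
end

declared   = line.encoding.name    # the string keeps the declared encoding
char_count = line.length           # character-aware, still one byte each
as_utf8    = line.encode("UTF-8")  # conversion happens only when asked for

puts "#{declared}: #{char_count} chars, #{line.bytesize} bytes"
puts "as UTF-8: #{as_utf8} (#{as_utf8.bytesize} bytes)"
```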

From: Norbert Lindenberg (Oct 25 2006, at 07:58)

Nikolai: People in general don't worry about these things. They use systems like Windows, Java, JavaScript, or web search every day, without thinking about the fact that these systems routinely convert all text to Unicode for internal processing. They may notice that they can use nice typography, mathematical symbols, and different languages in the same document, and it all just works, thanks to Unicode.

From: Nikolai Weibull (Oct 26 2006, at 15:43)

Norbert: If people didn't worry about these things we wouldn't have anything but ASCII. And sure, if everyone used Unicode, we would have a pretty good solution to many problems. But as this isn't the case, pretending that everything is Unicode and always will be just doesn't cut it.

Actually, I don't quite get your response, now that I think about it. What I said was that people don't necessarily want all their data converted into Unicode. What you're saying is that people don't want to worry about encodings and that many systems do this. But there are two problems with that:

1. How do these systems remove the worry? I mean, given a file to read, it assumes - I'm guessing based on operating system - that the file is in a given encoding, e.g., UTF-16LE and then just blindly churns away at the data. Sure, maybe it has handling for BOMs and tries to determine if it's actually a UTF-8 file or perhaps an ASCII file. But what if it isn't in the default encoding, or what if the system guesses wrong? Users perhaps don't want to worry about encodings, but hey, your job as a programmer is to see to it that users don't have to. And we who design libraries and languages need to make it possible for you, the programmer, to deal with this kind of problem in a flexible way. Assuming that everything will always be Unicode isn't very flexible.

2. I don't think people necessarily want their data converted into some internal representation, e.g., UTF-16 (which is by far the worst choice - Hi, surrogate pairs!) or UTF-32. I mean, what does it gain /you/, the programmer using the library or language? I mean if you want your UTF-8-encoded file converted to UTF-32 for saving into some database, or similar, that requires UTF-32-encoded text as input, go ahead and use iconv for that. If you want to scan your UTF-8-encoded file for matches of the regular expression /^print this line$/ I don't see what you gain by having the contents of that file internalized to UTF-16 before you do the scanning. To use a quote that predates Unicode: "Show me the beef". And again, you can't claim that the system should just understand that the file is UTF-8-encoded. Sure, on a Unix system, that's the natural default. And on a Windows system it's most likely going to be UTF-16LE. And defaults are great, but you have to cater for the other possibilities, e.g., basically 90-95 percent of all Japanese content, as well.

From: Makoto (Oct 26 2006, at 18:40)

Japanese XML Profile (W3C Note and JIS TR), available at http://www.w3.org/TR/japanese-xml/, shows a number of problems around the conversion between Japanese legacy encodings and Unicode. Different implementations use different conversion tables. This diversity is already causing a lot of problems, and will continue to do so. In particular, digital signature is hopeless.

I have mixed feelings.

First, the Japanese have only themselves to blame for such diversity of conversion tables. This unfortunate situation could have been avoided if the Japanese committees for 10646 had provided a normative conversion table. I tried, but I was too late.

Second, now that this problem will stay forever, legacy encodings do not provide interoperability for network computing. But legacy encodings are used for existing data and are still preferred by a lot of users. We are stuck.

Third, Unicode is not perfect, but is probably the best now. We have to live with it. I can point out problems of Unicode, but I also believe that Japanese legacy encodings have problems and no bright future.

From: Norbert Lindenberg (Oct 30 2006, at 23:52)

Nikolai: Most people are end users. They don't want to worry about character encodings, and they shouldn't have to. Things should just work for them.

You're right that this means that software engineers have to design software to be smart about character encodings. This can mean using different mechanisms, depending on circumstances: Use file formats that are specified to use Unicode. Use file formats that let each file specify its encoding. Look for character encoding information in the network protocol. Look for BOMs. Guess based on the operating system and the locale. Use character encoding detection algorithms. The former mechanisms are better than the latter ones, but each one has its place. You may still need to provide users with a user interface, such as an encodings menu, that lets them fix the occasional errors of the software.
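The ordering of those mechanisms can be sketched as a simple cascade. This is an illustration only, assuming a present-day Ruby (1.9 or later); `guess_encoding`, its parameters, and the fallback value are hypothetical, UTF-32 BOMs are left out for brevity, and the "valid UTF-8" test stands in for a real detection algorithm.

```ruby
# Hypothetical helper: try the more reliable mechanisms first.
def guess_encoding(bytes, declared: nil, fallback: "ISO-8859-1")
  # 1. A label from the file format or network protocol wins.
  return declared if declared

  raw = bytes.b

  # 2. Look for a byte order mark (UTF-32 BOMs omitted in this sketch).
  boms = {
    "\xEF\xBB\xBF".b => "UTF-8",
    "\xFF\xFE".b     => "UTF-16LE",
    "\xFE\xFF".b     => "UTF-16BE",
  }
  boms.each { |bom, name| return name if raw.start_with?(bom) }

  # 3. Crude detection: does the data happen to be valid UTF-8?
  return "UTF-8" if raw.dup.force_encoding("UTF-8").valid_encoding?

  # 4. Last resort: an OS- or locale-style default, passed in by the caller.
  fallback
end

puts guess_encoding("\xEF\xBB\xBFhi".b)
puts guess_encoding("caf\xE9".b)
puts guess_encoding("caf\xE9".b, declared: "Windows-1252")
```

Even with all four steps the answer is still a guess, which is why the encodings menu remains useful.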

Conversion to Unicode generally allows a single library to handle text in all languages. Because it's a single library, it can be well-tested and optimized. If you use other encodings for internal processing, you often have to implement the same algorithms multiple times to account for the differences in character encodings, and the quality and performance, or even existence, of these implementations can vary widely.

Regular expressions are an excellent example. Say you search for "a". Since computers don't really know characters, this really means you're looking for a byte value in a sequence of bytes. For most encodings, you look for 0x61. But not all 0x61-valued bytes are "a". Some of them may be the second byte of a two-byte character in Shift-JIS, or any byte of a two-byte kanji character in ISO-2022-JP, or the "a" within an "&auml;" character reference for an "ä" that couldn't be represented directly in the encoding. To know which 0x61 bytes really are "a"s, the regular expression implementation needs to have detailed knowledge about the character encoding. But that's not all. You may want to use case-insensitive search. For that, the implementation needs to know the case mappings of all characters in each character encoding, as byte sequences of course. And you may want to search for character classes, such as letters. For that, the implementation needs to know all letters in each character encoding, as byte sequences of course. There are numerous processes that need to know character properties. Requiring all these processes to know about the properties and byte representation of characters in hundreds of character encodings adds needless complexity. And what do you do if your pattern is given in an EBCDIC encoding, but the target text in which you search is given in GB 2312? How do you know that the 0x81 in the pattern should match some (but not all) of the 0x61 bytes in the target string?
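The Shift-JIS case can be shown in a few lines. This sketch assumes a present-day Ruby (1.9 or later) with its bundled Shift_JIS transcoder; the two sample bytes, 0x83 0x61, encode the single katakana letter DI (U+30C2).

```ruby
# Assumes a modern Ruby (1.9+) with its bundled Shift_JIS transcoder.
sjis_bytes = "\x83\x61".b    # one Shift_JIS character: KATAKANA LETTER DI

# Byte-oriented search finds an "a" (0x61) that is not really there.
byte_hit = sjis_bytes.include?("a")

# Decode with the right encoding, convert to Unicode, then search characters.
text     = sjis_bytes.dup.force_encoding("Shift_JIS").encode("UTF-8")
char_hit = text.include?("a")

puts "byte search found 'a'? #{byte_hit}"
puts "character search found 'a'? #{char_hit}"
```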

Using Unicode means that all this detailed knowledge is needed only for one encoding, and that for all other encodings only the mapping to and from Unicode needs to be known.

Software design has to acknowledge that currently a lot of text is stored in non-Unicode encodings. But that doesn't mean that the internal processing of new platforms such as Ruby needs to be held hostage by legacy encodings.
