Suppose we like the idea of going serverless (we do). What should we worry about when we make that bet? What I hear when I talk to people thinking about it, mostly, is latency. Can a run-on-demand function respond as quickly as a warmed-up Web server sitting there in memory waiting for incoming work? The answer, unsurprisingly, is “it depends”.

[This is part of the Serverlessness series.]

What we talk about when we talk about latency · First of all, in this context, latency conversations are almost all about compute latency; in the AWS context, that means Lambda functions and Fargate containers. For serverless messaging services like SQS and databases like DynamoDB, the answer is generally “fast enough to not worry about”.

There’s this anti-pattern that still happens sometimes: I’m talking to someone about this subject, and they say “I have a hard latency requirement of 120ms”. (For those who aren’t in this culture, “ms” stands for milliseconds and is the common currency of latency discussions. So in this case, a little over a tenth of a second.)

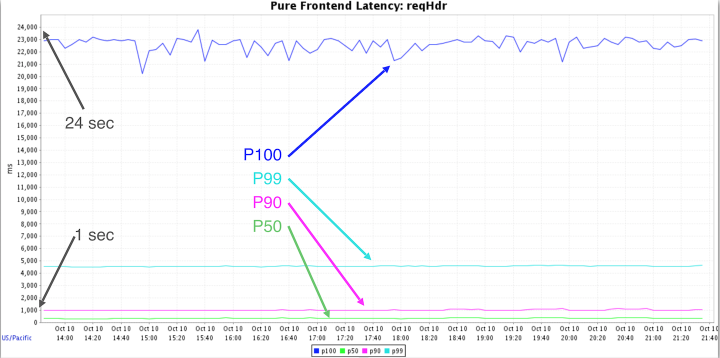

Inside AWS, a claim like that would be met with a blank stare, because latency is way, way more than just a number. To steal from an old joke: Latency is more complicated than you think, even when you think it’s more complicated than you think. Let’s start with a graph:

To start with, nobody should ever talk about latency without a P-number. P50 is the time that 50% of requests come in under, P90 is the time that 90% come in under, and so on through P99 and P99.9; and then P100 is the longest latency observed in some measurement interval.
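
If you want to see how P-numbers fall out of raw measurements, here is a minimal sketch using the nearest-rank method. That method is just one common convention (monitoring tools often interpolate instead), and the sample latencies are made up for illustration.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank into the sorted list
    return ordered[rank - 1]

# Made-up latencies in milliseconds, for illustration only.
latencies_ms = [120, 95, 240, 180, 110, 4800, 130, 900, 150, 21000]

for p in (50, 90, 99, 100):
    print(f"P{p}: {percentile(latencies_ms, p)} ms")
```

Note how a couple of slow outliers leave P50 alone but dominate everything from P90 up, which is why a single number tells you so little.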

Looking at that graph, you can see that half the queries completed in about a quarter of a second, 90% in under a second, 99% in under five seconds, and there were a few trailers out there in twenty-something second territory. (If you’re wondering, this is a real graph of one of the microservices inside EC2 Auto Scaling, some control-plane call. The variation is because most Auto Scaling Groups have a single-digit handful of instances in them, but some have hundreds and a very few have tens of thousands.)

Now, let’s make it more complicated.

Running hot and cold · The time it takes to launch a function depends on how recently you’ve launched the function. If you’ve run it reasonably recently, we’ve probably got it loaded on a host and ready to go; it’s just a matter of routing the event to the right place. If not, we have to go find an empty host, find your function in storage, pull it out, and install it before we fire it up. The latter scenario is referred to as a “Cold Start”, and with any luck will only show up at some high P-number, like P90 or higher. The latency difference can be surprising.

It turns out that there are a variety of tricks you can use to remediate cold-start effects; ask your favorite search engine. And that’s all I’m going to say on the subject, because while the techniques work, they’re annoying and it’s also annoying that people have to use them; this is a problem that we need to just do away with.

Photo: Ryan Mahle from Sherman Oaks, CA, USA - Flickr.com

Polyglot latency · Once the triggering event is routed to your function, your function gets to start computing. Unfortunately, that doesn’t mean it always starts doing useful work right away; and that depends on the language it’s written in. If it’s a NodeJS or Python program, it might have to load and compile some source code. If it’s Java or .NET, it may have to get a VM running. If it’s Go or C++ or Rust, you drop straight into binary code.

And because this is latency, it’s even more complicated than that: some of the language runtime initialization happens only on cold starts, and some happens even on warm starts.
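
The same cold-versus-warm split shows up in your own code. In a Python Lambda function, module-level code runs once when a new execution environment is initialized, while the handler body runs on every invocation. Here is a minimal sketch of that; `_load_expensive_config` and its 50ms sleep are hypothetical stand-ins for real setup work, and the loop at the bottom only simulates warm invocations in a single local process.

```python
import time

# Module-level code runs once per execution environment, i.e. on a cold start.
_INIT_STARTED = time.perf_counter()
INIT_COUNT = 0

def _load_expensive_config():
    """Hypothetical stand-in for slow setup: heavy imports, SDK clients, config parsing."""
    global INIT_COUNT
    INIT_COUNT += 1
    time.sleep(0.05)  # pretend this is expensive
    return {"table": "example-table"}

CONFIG = _load_expensive_config()
INIT_SECONDS = time.perf_counter() - _INIT_STARTED

def handler(event, context):
    """Runs on every invocation, warm or cold, and reuses the already-built CONFIG."""
    started = time.perf_counter()
    result = {"table": CONFIG["table"], "echo": event.get("id")}
    result["handler_seconds"] = time.perf_counter() - started
    return result

if __name__ == "__main__":
    # Simulate three warm invocations in the same process.
    for i in range(3):
        print(handler({"id": i}, None))
    print(f"init ran {INIT_COUNT} time(s) and took {INIT_SECONDS:.3f}s")
```

Anything you can move out of the handler and into module scope is paid for once per cold start rather than once per request.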

It’s worth saying a few words about Java here. There is no computer language that, for practical purposes, runs usefully faster on general-purpose server-side code than Java. That is to say, Java after your program is all initialized and the VM warmed up. There has been a more-or-less conscious culture, stretching back over the decades of Java’s life, of buying runtime performance and being willing to sacrifice startup performance.

And of course it’s not all Java’s fault; a lot of app code starts up slow because of massive dependency-injection frameworks like Spring and Guice; these tend to prioritize flurries of calls through the Java reflection APIs over handling that first request. Now, Java needn’t have sluggish startup; if you must have dependency injection, check out Dagger, which tries to do it at compile time.

The take-away, though, is that mainstream Java is slow to start and you need to do extra work to get around that. My reaction is “Maybe don’t use Java then.” There are multiple other runtimes whose cold-start behavior doesn’t feature those ugly P90 numbers. One example would be NodeJS, and you could use that but I wouldn’t, because I have no patience for the NPM dependency labyrinth and also don’t like JavaScript. Another would be Python, which is not only a decent choice but almost compulsory if you’re in Scientific Computing or Machine Learning.

But my personal favorite choice for serverless compute is the Go programming language. It’s got great, clean, fast tooling, it produces static binaries, it’s got superb concurrency primitives that make it easy to avoid the kind of race conditions that plague anyone who goes near java.lang.Thread, and finally, it is exceedingly readable, a criterion that weighs more heavily with me as each year passes. Plus the Go Lambda runtime is freaking excellent.

State hydration · It’s easy to think about startup latency problems as part of the infrastructure, whether it’s the service or the runtime, but lots of times, latency problems are right there in your own code. It’s not hard to see why; services like Lambda are built around stateless functions, but sometimes, when an event arrives at the front door, you need some state to deal with it. I call this process “state hydration”.

Here’s an extreme example of that: I was talking to a startup that had a growing customer base and also growing AWS bills. Their load was super peaky and they were (reasonably) grumpy about paying for computers to not do anything. I said “Serverless?” and they said “Yeah, no, not going to happen” and I said “Why not?” and they said “Drupal”. Drupal is a PHP-based Web framework that probably still drives a substantial portion of the Internet, but it’s very database-centric, and this particular app needed to run like eight PostgreSQL queries to recover enough context to do any useful work. So a Lambda function wasn’t really an option.

Here’s an extreme example of the opposite, that I presented in a session at re:Invent 2017. Thomson Reuters is a well-known news organization that has to deal with loads of incoming videos; the process includes transcoding and reformatting. This tends to be linear in the size of the video with a multiplier not far off 1, so a half-hour video clip could take a half-hour to process.

They came up with this ultra-clever scheme where they used FFmpeg to chop the video up into half-second-ish segments, then threw them into an S3 bucket which they’d set up to fire a Lambda for each new object. Those Lambdas processed the segments in parallel, FFmpeg glued them back together, and all of a sudden they were processing a half-hour video in a handful of seconds. State hydration? No such thing, the only thing the Lambda needed to know was the S3 object name.
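
To make the "only needs the S3 object name" point concrete, here is a minimal sketch of an S3-triggered handler. This is not Thomson Reuters' code; `process_segment`, the bucket name, and the key are hypothetical placeholders. The only real-world detail it relies on is the shape of the S3 event notification, where each record carries the bucket name and a URL-encoded object key.

```python
from urllib.parse import unquote_plus

def process_segment(bucket, key):
    """Hypothetical placeholder for the real per-segment work (e.g. transcoding)."""
    return f"processed s3://{bucket}/{key}"

def handler(event, context):
    """Handle an S3 object-created notification; each record names one new object."""
    results = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        results.append(process_segment(bucket, key))
    return results

# A trimmed-down sample event with made-up names, for local experimentation.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-segments"},
                "object": {"key": "clip-42/segment+0001.ts"}}}
    ]
}
print(handler(sample_event, None))
```

Everything the function needs arrives in the event itself, so there is nothing to look up before the real work starts.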

Another nice thing about the serverless approach here is that doing this in the traditional style would have required staging a big enough fleet, which (since this is a news publisher) would have meant predicting when news would happen, and how telegenic it would be. Which would obviously be impossible. So this app has SERVERLESS written on it in letters of fire 500 meters high.

Database or not · The conventional approach to state hydration is to load your context out of a database. And that’s not necessarily terrible, it doesn’t mean you have to get stuck in a corner like those Drupal-dependent people. For example:

You could use something like Redis or Memcached (maybe via ElastiCache); those things are fast.

You could use a key/value optimized NoSQL database like DynamoDB or Cassandra or MongoDB.

You could use something that supports GraphQL (like AppSync), a protocol specifically designed to turn a flurry of RESTful fetches into a single optimized HTTP round trip.

You could package up your events with a whole lot more context so that the code processing them doesn’t have to do much work to get its bearings. The SQS-to-Lambda capability we announced earlier this year is getting a whole lot of use, and I bet most of those reader functions start up pretty damn quick.
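
As an illustration of that last option, here is a minimal sketch of an SQS-triggered handler whose messages carry their own context. The message fields (`order_id`, `customer`, `items`) are assumptions invented for the example; the only thing taken from the real service is that each record in the batch has a `body` string.

```python
import json

def handler(event, context):
    """Process a batch of SQS messages whose bodies carry their own context."""
    totals = []
    for record in event["Records"]:
        order = json.loads(record["body"])
        # Everything needed is in the message: no database lookup before working.
        subtotal = sum(item["price_cents"] * item["qty"] for item in order["items"])
        discount = subtotal * order["customer"]["discount_percent"] // 100
        totals.append({"order_id": order["order_id"],
                       "total_cents": subtotal - discount})
    return totals

# A made-up message that includes the customer context the code needs.
sample_event = {"Records": [{"body": json.dumps({
    "order_id": "o-1",
    "customer": {"id": "c-9", "discount_percent": 10},
    "items": [
        {"sku": "a", "price_cents": 1500, "qty": 2},
        {"sku": "b", "price_cents": 500, "qty": 1},
    ],
})}]}
print(handler(sample_event, None))
```

The trade-off is bigger messages and some duplicated data in exchange for a function that can start working the moment it wakes up.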

Latency and affinity · There’s been this widely-held belief for years that the only way to get good latency in handling events or requests is to have state in memory. Thus we have things like session affinity and “sticky sessions” in conventional Web-facing apps, where you try to route strongly-related queries to the same server in a load-balanced fleet.

This can help with latency (we’ve used it in AWS services), but comes with its own set of problems. Most obviously, what happens when you lose that host, either because it fails or because you need to bounce it to patch the OS? First, you have to notice that it’s gone (harder than you’d think to do reliably), then you have to adjust the affinity routing, then you have to refresh the context in the replacement server. And what happens when you lose a whole Availability Zone, say a third of your fleet?

If you can possibly figure out a way to do state hydration fast, then you don’t have to have those session affinity struggles; just spray your requests or events across your fleet, trying to stress all the hosts evenly (still nontrivial, but tractable) and have a much simpler management task.

And once you’ve done that, you can probably just go serverless, let Lambda handle smoothing out the load, and don’t write any code that isn’t pure value-adding application logic.

How to talk about it · To start with, don’t just say “I need 120ms.” Try something more like “This has to be in Python, the data’s in Cassandra, and I need the P50 down under a fifth of a second, except I can tolerate 5-second latency if it doesn’t happen more than once an hour.” And in most mainstream applications, you should be able to get there with serverless. If you plan for it.
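
A requirement stated that way is something you can actually check against measurements. Here is a minimal sketch under a couple of assumptions: samples are (timestamp, latency) pairs in seconds, the median stands in for P50, and "no more than once an hour" is read as at most one request of five seconds or longer in any clock hour. Other readings are possible; the function name and defaults are made up for the example.

```python
from collections import Counter
from statistics import median

def meets_requirement(samples, p50_limit_s=0.2, slow_limit_s=5.0, max_slow_per_hour=1):
    """samples: list of (timestamp_seconds, latency_seconds) pairs."""
    if not samples:
        raise ValueError("need at least one sample")
    latencies = [latency for _, latency in samples]
    # The median is used as P50 here; it averages the two middle values
    # when the number of samples is even.
    p50_ok = median(latencies) < p50_limit_s
    # Count slow requests in each clock hour.
    slow_by_hour = Counter(
        int(ts // 3600) for ts, latency in samples if latency >= slow_limit_s
    )
    slow_ok = all(count <= max_slow_per_hour for count in slow_by_hour.values())
    return p50_ok and slow_ok

# Made-up measurements: one slow request in the first hour is tolerated.
good = [(0, 0.10), (10, 0.15), (20, 0.12), (30, 5.2), (3700, 0.11)]
print(meets_requirement(good))
print(meets_requirement(good + [(40, 6.0)]))  # a second slow request in the same hour
```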

Comment feed for ongoing:

From: eerie quark doll (Dec 15 2018, at 11:40)

Nice article.


From: Hugh (Dec 15 2018, at 16:36)

Thank you for this series - a great short introduction to why it's worth considering.


From: Ken Kennedy (Dec 16 2018, at 09:52)

Thanks for the article (and the whole series), Tim! This stuff is really interesting; I'm a DBA by trade, and do a hunk of dev work on the side...so I'm definitely by default in the "keep your state in the database" camp. These sorts of articles really help me wrap my head around the process changes needed for successful serverless.


From: Efi (Dec 16 2018, at 13:35)

Hey Tim,

Thank you for writing about this topic. Actually in our testing, we've found out that code initialization has more effect on your cold start than the other points.

Here is a nice summary of our results: https://medium.com/lumigo/web-frameworks-implication-on-serverless-cold-start-18ee5eb6c62a


From: Gavin B (Dec 19 2018, at 23:57)

Here's a TheRegister perspective - subheaded:

"If 2019 is the year you try AWS Lambda et al, then here are pitfalls to look out for"

https://www.theregister.co.uk/2018/12/19/serverless_computing_study/
