Modeling Is Hard

In “A post-mortem on the previous IT management revolution”, William Vambenepe writes, on the subject of standardization: “The first lesson is that protocols are easy and models are hard.” I agree about the relative difficulty, but I think that when it comes to interoperation, protocols are very difficult and shared models usually impossible. A couple of examples occur to me.

[This is the third time recently that I’ve referred to Mr. Vambenepe; I only ran across him when I wandered into this dodgy Cloud neighborhood. Given current circumstances I suspect it’s illegal for us to converse, but if things end well I’ll have to buy him a beer.]

We are re-visiting a theme here: I have long believed and repeatedly written that bits on the wire are the only serious reliable basis for interoperability, and worried in public about the feasibility of shared models. I believe the existence and success of the Internet and the Web are strong evidence in my favor. They have no object models or APIs; nor could they: they are just a collection of agreements on what bits we send each other, with accompanying narrative about what those bits are supposed to mean.

Case Study: Atom and/or JSON · Recently I’ve seen a few instances of protocols specified as allowing messages in either XML or JSON; this included drafts of the Sun Cloud APIs before I got involved.

I think the reason this happens is that popular programming frameworks including JAX-B and Rails make it easy to generate either on demand; “Why,” the protocol designer asks, “shouldn’t we provide both? It’s free!”.

Well, it’s free for you. But you’ve potentially doubled the work for someone implementing the other side of the protocol, who must be prepared to deal with either data format. So don’t do that.

The second problem is subtler and more serious: since there are two data formats and you don’t want to describe both of them at the bit-pattern level, you have to invent an abstract model for the protocol messages and define two mappings, one to each payload format.

This extra work adds zero value to the protocol, and introduces several opportunities for error. And it really only works well if your data structure is a relatively flat, frozen set of fields without much narrative. Which means that you don’t need XML anyhow. And if you do need XML, attempts to model it in JSON quickly spiral into sickening ugliness and complexity.
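To make the cost concrete, here is a minimal Python sketch of what "an abstract model plus two mappings" looks like even in the friendliest case. The `Course` class, its two fields, and the element and key names are all hypothetical, invented for illustration; they are not taken from any real API.

```python
import json
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class Course:
    """Hypothetical abstract model that both wire formats must map to."""
    title: str
    registered: int


def to_xml(course: Course) -> str:
    # Mapping 1: each field becomes a child element with text content.
    root = ET.Element("course")
    ET.SubElement(root, "title").text = course.title
    ET.SubElement(root, "registered").text = str(course.registered)
    return ET.tostring(root, encoding="unicode")


def from_xml(payload: str) -> Course:
    root = ET.fromstring(payload)
    return Course(root.findtext("title"), int(root.findtext("registered")))


def to_json(course: Course) -> str:
    # Mapping 2: each field becomes an object member; numbers stay numbers.
    return json.dumps({"title": course.title, "registered": course.registered})


def from_json(payload: str) -> Course:
    data = json.loads(payload)
    return Course(data["title"], int(data["registered"]))


course = Course("Whatever 101", 18)
print(to_xml(course))
print(to_json(course))
```

Even with a flat, two-field structure there are four functions to write and keep in step, and whoever sits at the other end of the wire has to be ready for both payloads.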

So don’t do that.

Case Study: Modeling a Number · What’s the simplest possible protocol element? I’d suggest it’s a single number. Suppose we are designing a simple XML protocol, and that protocol includes a field named registered which is used to communicate the number of students registered for a course. Here is its specification:

The content of the registered element gives the number of students registered in this class, updated at 5PM each Friday. The offering faculty’s current rules governing when in the semester courses may be added or dropped must be consulted to predict the possible changes in the value from week to week. This value could in principle be computed by retrieving the course roster and is included in this message to simplify certain operations which are frequently performed with respect to courses.

I like this description; it’s very specific as to the application semantics and what you have to watch out for, and even provides some justification as to why the field exists. So, is that enough specification? Is my protocol-design work done?

At this point, some readers will be shifting nervously in their seats, wondering whether I shouldn’t offer some syntax guidance. Well, that’s not rocket science; let’s add a paragraph to the specification:

The content of registered MUST match the regular expression [0-9]+.

I’m not actually sure we’ve just added value to the protocol. On the one hand, we’ve disallowed empty elements and fractional students. On the other, suppose the library that generated the XML sent you this:

<registered>
+18
</registered>

There’s been a pretty-printer at work here and a superfluous “+”. Should this be thrown on the floor? I’m not a Postel’s-Law absolutist, but tossing this does feel a bit ungenerous. If you went with just the first version, it might encourage implementors to be intelligently defensive about their number parsing. Is that bad?
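The difference between the two attitudes is easy to show in a few lines of Python. This is only a sketch: the function names `parse_strict` and `parse_lenient` are made up for illustration, and "lenient" here means nothing more than tolerating surrounding whitespace and a single leading plus sign.

```python
import re

STRICT = re.compile(r"[0-9]+")


def parse_strict(content: str) -> int:
    """Accept only content that matches the regular expression exactly."""
    if not STRICT.fullmatch(content):
        raise ValueError(f"not a valid registered value: {content!r}")
    return int(content)


def parse_lenient(content: str) -> int:
    """Tolerate surrounding whitespace and one leading '+', then apply the same rule."""
    trimmed = content.strip()
    if trimmed.startswith("+"):
        trimmed = trimmed[1:]
    return parse_strict(trimmed)


pretty_printed = "\n+18\n"
print(parse_lenient(pretty_printed))
try:
    parse_strict(pretty_printed)
except ValueError as err:
    print("strict parser rejects it:", err)
```

The lenient version still refuses things like an empty element or "1.5"; it just declines to punish a pretty-printer.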

I’ll tell you this: As a TDD disciple, if I read the initial spec language, I’d have cooked up a bunch of test cases that would have explored the value space pretty thoroughly before I shipped any code. Tighten the spec and I might have gotten lazy.

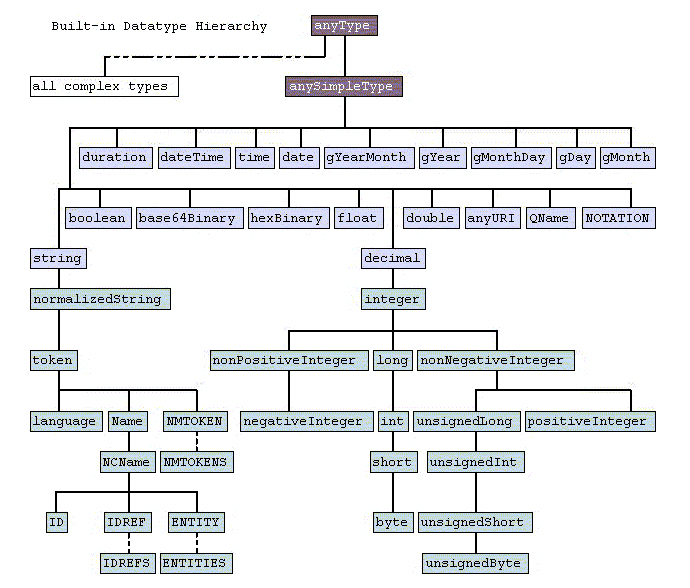

Still, a serious data-modeling fan might argue that I’m not going far enough. For maximum interoperability, data should be typed, right? Fortunately for our fan, XML Schema provides a rich repertory of primitive types. Here’s a picture, from XML Schema Part 2: Datatypes Second Edition:

Now let us consider what kind of a thing we have here; it’s probably safe to rule out base64Binary, hexBinary, float, and double. That leaves decimal, or rather its subtype integer. But what kind? Well, it’s just a bunch of people in a class, so let’s use unsignedByte and not waste memory. Only Java doesn’t do that and, oops, consider those big undergrad “Whatever 101” courses. OK, let’s say unsignedShort and hope this never gets used for one of those online self-improvement courses by Oprah and Eckhart Tolle. So maybe just nonNegativeInteger, oops I mean positiveInteger, because the class can’t be empty. Can it?

Then go have some fun reading the “lexical space” constraints that come with each of these types. I really hope that your XSD-checking library is compatible with the one at the other end of the wire.
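For anyone keeping score, here is a small Python sketch of the value ranges of the four integer types just considered. The ranges are the ones XML Schema defines; the enrollment figures and the helper name `types_accepting` are hypothetical.

```python
# Value ranges of the XML Schema integer types discussed above.
# None means "no upper bound".
XSD_RANGES = {
    "unsignedByte": (0, 255),
    "unsignedShort": (0, 65535),
    "nonNegativeInteger": (0, None),
    "positiveInteger": (1, None),
}


def types_accepting(value: int) -> list:
    """Return the names of the listed types whose value space contains value."""
    accepted = []
    for name, (low, high) in XSD_RANGES.items():
        if value >= low and (high is None or value <= high):
            accepted.append(name)
    return accepted


# Hypothetical enrollments: an empty class, a seminar,
# a big undergrad course, and a mass online course.
for enrolled in (0, 18, 900, 500_000):
    print(enrolled, types_accepting(enrolled))
```

No single choice covers every row without either excluding a plausible class size or giving up the bound that motivated picking a narrow type in the first place.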

Enough already; it seems obvious to me that in the vast majority of scenarios, we would be investing substantial effort but not really adding any value in the process.

At this point I should acknowledge that to some extent I’m whacking at a straw-man here. But I do believe the following things about designing protocols:

You can’t provide too much English prose about what the data means and how it relates to the real world.

You may add value by constraining syntax beyond what’s implied by the specification prose. But not always; you may introduce complexity and rigidity without corresponding benefit.

Trying to get implementors at both ends of a protocol to agree on a data model is at best difficult and requires many decisions, each of which is easy to get wrong and each of which may introduce unnecessary brittleness.

Comment feed for ongoing:

From: Francis Hwang (Apr 30 2009, at 04:43)

Tim, have you ever read Jaron Lanier's essay "Why Gordian software has convinced me to believe in the reality of cats and apples"? In it he discusses certain recent developments in robotics that work less on developing a robust Platonic model of the world, and more on pattern recognition. I find myself referring to his emphasis on "surfaces" all the time when thinking of standards, or even of the nature of dynamic typing.

http://www.edge.org/3rd_culture/lanier03/lanier_index.html

"When you de-emphasize protocols and pay attention to patterns on surfaces, you enter into a world of approximation rather than perfection. With protocols you tend to be drawn into all-or-nothing high wire acts of perfect adherence in at least some aspects of your design. Pattern recognition, in contrast, assumes the constant minor presence of errors and doesn't mind them. My hypothesis is that this trade-off is what primarily leads to the quality I always like to call brittleness in existing computer software, which means that it breaks before it bends."

(He uses the word "protocol" here differently than you do, but I think the similarity in argument is strong regardless.)


From: Ed Davies (Apr 30 2009, at 04:47)

So, do you think it's a good thing or a bad thing that the XML Infoset, the XPath tree model and the DOM are similar but subtly different? In other words, would there have been a benefit to publishing the model along with the XML syntax?


From: Martin Probst (Apr 30 2009, at 05:51)

There is no question that the XML Schema type system is weird. The data types are actually the harmless part; the derivation/substitution/extension/restriction rules for complex types (i.e. actual elements) are much, much worse, as you probably know.

Nonetheless, I think that there is some value in giving a definition of what a "number" in this context should be. Even if it's just in plain text, i.e. "an integer number", or "a positive integer number". You give both producers and consumers an indication of what a legal value should be, without them having to deduce that from the semantic meaning of the field (which can lead to very different interpretations).

It's just too easy to miss special cases in programming interfaces, even with simple things like numbers. I think more precise language prods people to think about these edge conditions.


From: John Cowan (Apr 30 2009, at 07:47)

If the protocol is a negotiated one, I agree that having both XML and JSON is probably pointless. But if it's dictated by the server side (who presumably is able to put in the extra implementation effort), it may be worthwhile to provide both protocol types using a common data model. Some users are better set up to communicate in XML, some in JSON. It's kind of like the right to talk to the Government of Canada in either English or French and get a reply in the same language. :-)


From: Peter Keane (Apr 30 2009, at 08:19)

On the Atom & JSON trend: I think this is largely due to the fact that parsing XML w/ JavaScript is no fun at all (and parsing JSON is a breeze). If your API will be consumed by a browser (at this time, generally same domain) JSON is almost a must. Yet I'd say XML offers all the right ingredients to formulate an "application-level protocol for publishing and editing Web Resources" (AtomPub).

I'm unwilling to NOT offer AtomPub as a write-back protocol. Our solution is based on the fact that it's actually easy to *create* an Atom Entry in JavaScript. So...we provide both Atom and JSON out, but only accept Atom for write back. Not sure if that seems crazy or not, but it's worked out well for us.


From: Rick Jelliffe (Apr 30 2009, at 09:21)

Just for what it is worth:

<schema xmlns="http://purl.oclc.org/dsdl/schematron">
  <title>Single number protocol</title>
  <p>For example
    <span class="code">&lt;registered&gt;18&lt;/registered&gt;</span> or
    <span class="code">&lt;registered&gt;+18&lt;/registered&gt;</span>
  </p>
  <pattern>
    <rule context="/">
      <assert test="registered">
        The protocol expects a single element called registered.
      </assert>
    </rule>
    <rule context="registered">
      <assert test="number(.) or number(substring-after(., '+'))">
        The number of students registered should use simple digits.
      </assert>
      <report test="contains(., '+')" role="warning">
        Numbers may start with a +, however they will need rework later so + is best avoided.
      </report>
      <report test="*">
        There are no other elements allowed except the registered element.
      </report>
    </rule>
  </pattern>
</schema>


From: jgraham (May 01 2009, at 06:43)

I'm afraid I'm going to have to disagree about the value of rigorous specifications. For example, from your description of the registered element, I have no idea which of the following are supposed to work, and what each of them is supposed to represent:

<registered></registered>

<registered>10</registered>

<registered> 10</registered>

<registered> 10 </registered>

<registered>0x10</registered>

<registered>010</registered>

<registered>10.0</registered>

<registered>10.5</registered>

<registered>A10</registered>

<registered>10junk</registered>

<registered>ten</registered>

<registered><value>10</value></registered>

<registered>++10</registered>

So given your spec text and ten different implementations of the format, I would expect ten different interpretations of how to handle incoming documents. Each implementation may have any number of tests to ensure that they behave according to their interpretation of the spec but, without significant effort reverse engineering the competition, those tests won't have any value in ensuring interoperability. Adding more prose about the expected real-world situations that the spec is modeling doesn't really help here; you still don't know if the value 0x10 means 0, 10, 16 or should throw an error.
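The divergence is easy to reproduce. The Python sketch below uses three hypothetical parsers, each a plausible reading of "the content is a number": Python's own int(), a source-literal style that honours prefixes such as 0x, and an atoi-like reader that takes leading digits and ignores the rest. The function names are invented for illustration.

```python
import re


def parse_python_int(text: str) -> int:
    # Python's int(): allows surrounding whitespace and a sign, base 10 only.
    return int(text)


def parse_literal_style(text: str) -> int:
    # Base 0 treats the text like a source-code literal, so "0x10" is hex.
    return int(text.strip(), 0)


def parse_leading_digits(text: str) -> int:
    # atoi-like: skip whitespace, read leading digits, ignore what follows.
    match = re.match(r"\s*([0-9]+)", text)
    if match is None:
        raise ValueError(f"no leading digits in {text!r}")
    return int(match.group(1))


def interpret(text: str) -> dict:
    """Map each parser's name to its result, or to 'error' if it rejects the text."""
    results = {}
    for parser in (parse_python_int, parse_literal_style, parse_leading_digits):
        try:
            results[parser.__name__] = parser(text)
        except ValueError:
            results[parser.__name__] = "error"
    return results


for sample in ("10", " 10 ", "0x10", "010", "10junk"):
    print(repr(sample), interpret(sample))
```

All three agree on "10" and " 10 ", and then part ways: "0x10" comes out as an error, 16 and 0 respectively, while "010" is 10 for two of them and an error for the literal-style parser.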

That said, I quite agree with your next point, that adding authoring requirements to the spec is of very little value. If you are lucky and the people producing your content are diligent then it means that you might not hit edge cases so often. It still doesn't tell you what to do when you do hit them so authoring requirements often do little for interoperability (they do have a value for authors of course).

The right solution to this problem is to take the HTML5 path and write the parsing rules as part of the specification. For example, in this case you might write an algorithm like:

1. Let value be the empty string

2. Let k be 0

3. Let text be the concatenation of all text node descendants of the <registered> element in tree order

4. If len(text) is 0 then return undefined

5. Let c be the k-th character of text

6. While k < len(text) and c is a whitespace character or +:

6.1 Increase k by one and, if k < len(text), let c be the k-th character of text

7. If k == len(text) return undefined

8. While k < len(text) and c is a decimal digit:

8.1 Append c to value, increase k by one and, if k < len(text), let c be the k-th character of text

9. If value is the empty string return undefined

10. Otherwise return value interpreted as a base-10 integer

(This of course assumes that you have already defined the meaning of things like the len() function and the undefined value that indicates an error.) The specification would then have a MUST-level requirement for behavior that is indistinguishable from that of the algorithm. Given such an algorithm, it is trivial to deduce what the correct behavior is supposed to be and it is trivial for implementers to write tests that verify that they are meeting the specification. Importantly, it also encourages the people writing the specification to consider what mistakes authors could make, thus increasing the chance that the format is robust against those mistakes.
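One possible reading of the algorithm as running code is the following Python sketch. It rests on several assumptions that the steps above leave open: the first loop stops at the first character that is neither whitespace nor +, the second loop stops at the first non-digit, "undefined" is represented by None, and the document is parsed with the standard-library ElementTree. The function name `parse_registered` is invented for illustration.

```python
import xml.etree.ElementTree as ET
from typing import Optional

WHITESPACE = " \t\r\n"
DIGITS = "0123456789"


def parse_registered(element: ET.Element) -> Optional[int]:
    """Extract the number from a <registered> element, or None for 'undefined'."""
    value = ""
    k = 0
    # Concatenate every text node under the element, in tree order.
    text = "".join(element.itertext())
    if len(text) == 0:
        return None
    # Skip leading whitespace and '+' characters.
    while k < len(text) and (text[k] in WHITESPACE or text[k] == "+"):
        k += 1
    if k == len(text):
        return None
    # Collect consecutive decimal digits, stopping at the first non-digit.
    while k < len(text) and text[k] in DIGITS:
        value += text[k]
        k += 1
    if value == "":
        return None
    return int(value)


samples = [
    "<registered></registered>",
    "<registered> 10 </registered>",
    "<registered>0x10</registered>",
    "<registered>10.5</registered>",
    "<registered>ten</registered>",
    "<registered><value>10</value></registered>",
    "<registered>++10</registered>",
]
for sample in samples:
    print(sample, "->", parse_registered(ET.fromstring(sample)))
```

Under these assumptions every input in the list above has exactly one answer. For instance 0x10 yields 0 and 10.5 yields 10, which may or may not be what the specification writer wants, but it is at least the same everywhere.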

Of course nothing in the above constrains what the actual internal representation should be. It is, after all, only a black box requirement that you act as if the above algorithm were operating. If a difference isn't something you can test, it's not something that can cause an interoperability problem and so it should be left free in the spec. You could have the issue that large values will exceed the value that some implementations would like to use for their underlying representation; this could be fixed by specifying that behavior is only well defined up to some large context-dependent limit (e.g. a 32 bit unsigned integer would be enough space for 2/3 of the world's population to sign up to your course) or even by truncating larger values to the limit even when implementations could cope with larger numbers.

I don't deny that this is harder for specification writers than just hoping implementations sort themselves out. But for a successful format with a diverse end-user community it is easier for both authors and also implementers, since they will be freed from the need to spend most of their time reverse-engineering their competitors.


From: Faui Gerzigerk (May 03 2009, at 04:39)

jgraham, if you define numbers as procedural parsers, aren't you just shifting the whole problem towards interpreting an ill-defined non-existent programming language?


From: jgraham (May 05 2009, at 01:29)

> if you define numbers as procedural parsers, aren't you just shifting the whole problem towards interpreting an ill-defined non-existent programming language?

Well I'm not really defining numbers, I'm defining how to extract a number from an XML node (or, if you accept that XML parsing is well defined, from a stream of bytes).

Your description, of course, makes it sound like a hopeless approach but in practice it turns out to work OK. Humans are rather good at following explicit sequences of instructions (compared to their ability to infer solutions from constraints) and the language, whilst undoubtedly never achieving the preciseness that would be desired by Russell and Whitehead, can be tight enough to produce interoperable implementations. At least that is my experience from working on implementations of HTML 5 and ECMAScript, both of which use this kind of style in their specifications.
