My input stream is full of it: Fear and loathing and cheerleading and prognosticating on what generative AI means and whether it’s Good or Bad and what we should be doing. All the channels: Blogs and peer-reviewed papers and social-media posts and business-news stories. So there’s lots of AI angst out there, but this is mine. I think the following is a bit unique because it focuses on cost, working backward from there. As for the genAI tech itself, I guess I’m a moderate; there is a there there, it’s not all slop. But first…

The rent is too damn high · I promise I’ll talk about genAI applications, but let’s start with money. Lots of money, big numbers! For example, venture-cap startup money pouring into AI, which as of now apparently adds up to $306 billion. And that’s just startups; among the giants, Google alone apparently plans $75B in capital expenditure on AI infrastructure, and they represent at most maybe a quarter of cloud capex. You think those are big numbers? McKinsey offers The cost of compute: A $7 trillion race to scale data centers.

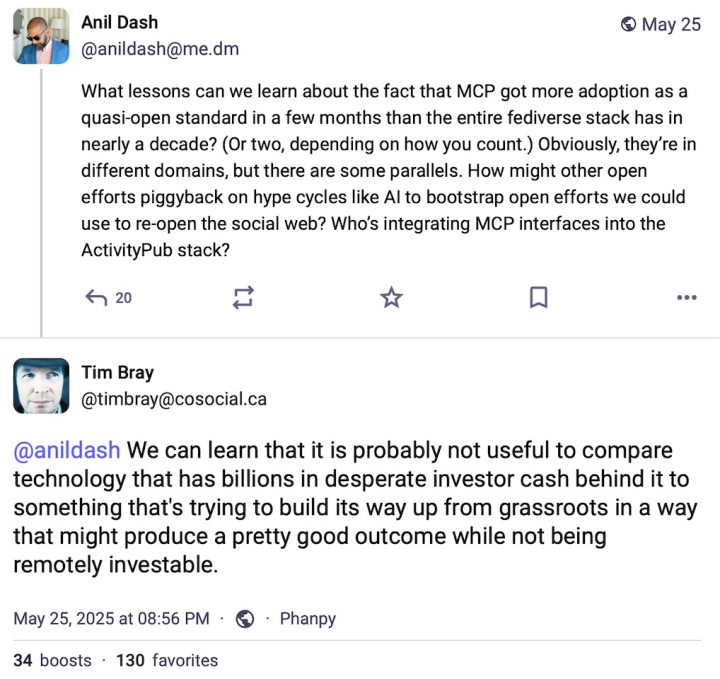

Obviously, lots of people are wondering when and where the revenue will come from to pay for it all. There’s one thing we know for sure: The pro-genAI voices are fueled by hundreds of billions of dollars’ worth of fear and desire (fear that it’ll never pay off, and desire for a piece of the money). Can you begin to imagine the pressure for revenue that investors and executives and middle managers are under?

Here’s an example of the kind of debate that ensues.

“MCP” is Model Context Protocol, used for communicating between LLM software and other systems and services. I have no opinion as to its quality or utility.

I suggest that when you’re getting a pitch for genAI technology, you should have that greed and fear in the back of your mind. Or maybe at the front.

And that’s just the money · For some reason, I don’t hear much any more about the environmental cost of genAI, the gigatons of carbon pouring out of the system, imperilling my children’s future. Let’s please not ignore that; let’s read things like Data Center Energy Needs Could Upend Power Grids and Threaten the Climate and let’s make sure every freaking conversation about genAI acknowledges this grievous cost.

Now let’s look at a few sectors where genAI is said to be a big deal: Coding, teaching, and professional communication. To keep things balanced, I’ll start in a space where I have kind things to say.

Coding · Wow, is my tribe ever melting down. The pro- and anti-genAI factions are hurling polemical thunderbolts at each other, and I mean extra hot and pointy ones. For example, here are 5600 words entitled I Think I’m Done Thinking About genAI For Now. Well-written words, too.

But, while I have a lot of sympathy for the contras and am sickened by some of the promoters, at the moment I’m mostly in tune with Thomas Ptacek’s My AI Skeptic Friends Are All Nuts. It’s long and (fortunately) well-written and I (mostly) find it hard to disagree with.

It’s as simple as this: I keep hearing talented programmers whose integrity I trust tell me “Yeah, LLMs are helping me get shit done.” The probability that they’re all lying or being fooled seems very low.

Just to be clear, I note an absence of concern for cost and carbon in these conversations. Which is unacceptable. But let’s move on.

It’s worth noting that I learned two useful things from Ptacek’s essay that I hadn’t really understood. First, the “agentic” architecture of programming tools: You ask the agent to create code and it asks the LLM, which will sometimes hallucinate; the agent will observe that the result doesn’t compile or makes all the unit tests fail, discard it, and re-prompt. If it takes the agent module 25 prompts to generate code that, while imperfect, is at least correct, who cares?
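A minimal sketch of that generate, check, discard, re-prompt loop follows. It assumes two hypothetical callables: `generate` stands in for the LLM call and `passes_checks` for the compile-and-run-the-tests step. Real agents differ a lot in how they build prompts and feed errors back; the cap of 25 attempts just echoes the number above.

```python
from typing import Callable, Optional, Tuple

def agent_loop(
    task: str,
    generate: Callable[[str], str],                    # hypothetical LLM call
    passes_checks: Callable[[str], Tuple[bool, str]],  # hypothetical compile + unit-test step
    max_attempts: int = 25,
) -> Tuple[Optional[str], int]:
    """Re-prompt until a candidate passes the checks or attempts run out.

    Returns (accepted_code, attempts_used); accepted_code is None on failure.
    """
    prompt = task
    for attempt in range(1, max_attempts + 1):
        candidate = generate(prompt)
        ok, feedback = passes_checks(candidate)
        if ok:
            return candidate, attempt
        # Discard the failed candidate and re-prompt with the error appended.
        prompt = f"{task}\n\nThe previous attempt failed:\n{feedback}"
    return None, max_attempts

# Toy stand-ins: the "LLM" hallucinates twice, then produces working code.
answers = iter(["retrun 1", "return", "return 1"])

def fake_llm(prompt: str) -> str:
    return next(answers)

def fake_checks(code: str) -> Tuple[bool, str]:
    return code == "return 1", "does not compile"

code, tries = agent_loop("write f()", fake_llm, fake_checks)
print(code, tries)  # prints: return 1 3
```

The design point is that the hallucinated candidates never reach the human; only the first one that survives the checks does, which is why the number of failed attempts matters less than it might seem.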

Second lesson, and to be fair this is just anecdata: It feels like the Go programming language is especially well-suited to LLM-driven automation. It’s small, has a large standard library, and a culture that has strong shared idioms for doing almost anything. Anyhow, we’ll find out if this early impression stands up to longer and wider industry experience.

Turning our attention back to cost, let’s assume that eventually all or most developers become somewhat LLM-assisted. Are there enough of them, and will they pay enough, to cover all that investment? Especially given that models that are both open-source and excellent are certain to proliferate? Seems dubious.

Suppose that, as Ptacek suggests, LLMs/agents allow us to automate the tedious low-intellectual-effort parts of our job. Should we be concerned about how junior developers learn to get past that “easy stuff” and on the way to senior skills? That seems a very good question, so…

Learning · Quite likely you’ve already seen Jason Koebler’s Teachers Are Not OK, a frankly horrifying survey of genAI’s impact on secondary and tertiary education. It is a tale of unrelieved grief and pain and wreckage. Since genAI isn’t going to go away and students aren’t going to stop being lazy, it seems like we’re going to re-invent the way people teach and learn.

The stories of students furiously deploying genAI to avoid the effort of actually, you know, learning, are sad. Even sadder are those of genAI-crazed administrators leaning on faculty to become more efficient and “businesslike” by using it.

I really don’t think there’s a coherent pro-genAI case to be made in the education context.

Professional communication · If you want to use LLMs to automate communication with your family or friends or lovers, there’s nothing I can say that will help you. So let’s restrict this to conversation and reporting around work and private projects and voluntarism and so on.

I’m pretty sure this is where the people who think they’re going to make big money with AI think it’s going to come from. If you’re interested in that thinking, here’s a sample: a slide deck by a Keith Riegert for the book-publishing business, which, granted, is a bit stagnant and a whole lot overconcentrated these days. I suspect scrolling through it will produce a strong emotional reaction for quite a few readers here. It’s also useful in that it talks specifically about costs.

That is for corporate-branded output. What about personal or internal professional communication, by which I mean emails and sales reports and committee drafts and project pitches and so on? I’m pretty negative about this. If your email or pitch doc or whatever needs to be summarized, or if it has the colorless, affectless, error-prone polish of 2025’s LLMs, I would probably discard it unread. I already found the switch to turn off Gmail’s attempts to summarize my emails.

What’s the genAI world’s equivalent of “Tl;dr”? I’m thinking “TA;dr” (A for AI) or “Tg;dr” (g for genAI) or just “LLM:dr”.

And this vision of everyone using genAI to amplify their output and everyone else using it to summarize and filter their input feels simply perverse.

Here’s what I think is an important finding, ably summarized by Jeff Atwood:

Seriously, since LLMs by design emit streams that are optimized for plausibility and for harmony with the model’s training base, in an AI-centric world there’s a powerful incentive to say things that are implausible, that are out of tune, that are, bluntly, weird. So there’s one upside.

And let’s go back to cost. Are the prices in Riegert’s slide deck going to pay for trillions in capex? Another example: My family has a Google Workspace account, and the price just went up from $6/user/month to $7. The announcement from Google emphasized that this was related to the added value provided by Gemini. Is $1/user/month gonna make this tech make business sense?
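A rough back-of-envelope sketch puts that $1 next to the $75B figure from earlier: how many paid seats would it take for the price rise alone to cover Google’s planned AI capex in one year? This loudly assumes the $75B is a single year’s spend and ignores operating costs, margins, and every other revenue source; only the order of magnitude matters.

```python
# Figures taken from the post; everything else is a simplifying assumption.
GOOGLE_AI_CAPEX_USD = 75e9       # Google's planned AI capex, treated as one year's spend
PRICE_RISE_PER_SEAT_MONTH = 1.0  # Workspace went from $6 to $7 per user per month

def seats_to_cover(capex_usd: float, per_seat_month: float) -> float:
    """Paid seats needed for one year of the price rise to equal the capex.

    Ignores operating costs, margins, and all other revenue.
    """
    return capex_usd / (per_seat_month * 12)

print(f"{seats_to_cover(GOOGLE_AI_CAPEX_USD, PRICE_RISE_PER_SEAT_MONTH):,.0f}")
# prints 6,250,000,000
```

That is about 6.25 billion paying seats, for one company’s capex, under assumptions that flatter the business case.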

What I can and can’t buy · I can sorta buy the premise that there are genAI productivity boosts to be had in the code space and maybe some other specialized domains. I can’t buy for a second that genAI is anything but toxic for anything education-related. On the business-communications side, it’s damn well gonna be tried because billions of dollars and many management careers depend on it paying off. We’ll see but I’m skeptical.

On the money side? I don’t see how the math and the capex work. And all the time, I think about the carbon that’s poisoning the planet my children have to live on.

I think that the best we can hope for is the eventual financial meltdown leaving a few islands of things that are actually useful, at prices that make sense.

And in a decade or so, I can see business-section stories about all the big data center shells that were never filled in, standing there empty, looking for another use. It’s gonna be tough, what can you do with buildings that have no windows?


From: Tom Passin (Jun 08 2025, at 20:07)

There's an inherent tension with current LLMs. To make effective use of them you have to apply your critical thinking skills for all they are worth. But for the lazy, and especially students who make heavy use of them, they won't be able to develop those skills. So pretty soon most people using LLMs won't have the skills to use them safely and effectively.


From: Marc (Jun 08 2025, at 20:45)

I just retired after developing networking, graphics, and simulation software for 50+ years. I was curious as to whether my prior skepticism about generative AI was warranted, so I've spent the past couple of months poking around.

Big AI is what we hear about in the tech media. This is all about personalities, valuation, monopolization, and throwing money at buying GPUs to increase capacity and capability. I don't see much (if any) benefit for the rest of us in their tools.

Then there is a more grassroots AI industry, working with small to mid-size text and code generators, or image/video generation/enhancement (with particular relevance to AI generated porn, which actually makes money). Everything built around open source tools and numerous open models.

I just put together a home server for roughly $1000US, with a 16-core CPU, 96 GB of memory and a 16 GB AMD GPU. It took a lot of research to get things running properly in the GPU (quantization is everything). I'm impressed, though, particularly with the code generation capabilities of Qwen2.5-Coder. Not for generating entire systems, but as a coding assistant. The intent is generating short snippets of code at a time using the so-called Fill in the Middle (FIM) technique. The existing code around the insertion point is passed as context to the inference engine along with a text prompt, and the output is new code to drop in at the point of insertion. Works quite well; quite amazing for someone who started off using TECO to write assembly code on 12-bit computers.

My meaningless opinion: Big AI will crash, as they can't make enough revenue to offset the costs of training and hardware quickly enough. The open source tools will form the basis of the next AI boom.
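The Fill-in-the-Middle idea Marc describes can be sketched roughly like this: split the file at the cursor, wrap the code before and after it in the model's FIM marker tokens, and splice whatever the model returns back in at the cursor. The token spellings below are assumed to match the Qwen2.5-Coder documentation; other code models use different markers, so check the model card. `build_fim_prompt` and `splice` are hypothetical helper names, and the actual call to the inference engine is left out.

```python
# Assumed Qwen2.5-Coder FIM marker tokens; verify against your model's documentation.
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"

def build_fim_prompt(source: str, cursor: int) -> str:
    """Split source at the cursor and wrap prefix/suffix in FIM tokens."""
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor out of range")
    prefix, suffix = source[:cursor], source[cursor:]
    # The model is expected to generate only the missing middle after FIM_MIDDLE.
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"

def splice(source: str, cursor: int, middle: str) -> str:
    """Insert the generated middle back into the source at the cursor."""
    return source[:cursor] + middle + source[cursor:]

src = "def add(a, b):\n    \nprint(add(2, 3))\n"
cursor = src.index("    \n") + 4                 # just after the indentation on the empty line
prompt = build_fim_prompt(src, cursor)           # send this to the model (not shown)
completed = splice(src, cursor, "return a + b")  # pretend the model returned this
print(completed)
```

Because the model sees the code on both sides of the gap, it can produce a snippet that fits the surrounding context, which is what makes this style useful for short, assistant-sized insertions rather than whole systems.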


From: Jesús Flores (Jun 08 2025, at 22:55)

Hey Tim! I’ve been programming professionally in PHP for 15 years. That might not be a lifetime compared to some folks, but it’s definitely a lot—especially considering I’ve spent most of that time focused on the same core area: backend services, often in high-stakes environments and high-throughput systems.

ChatGPT—and now Codex integrated within it—has become an invaluable tool for me. I’ve adapted to it, I understand its limitations, and in a way, it feels like it understands mine too. When I’m working on new systems, Codex suggests improvements on the fly. Sometimes it's off-base, unrealistic, or just plain wrong—kind of like a real coworker. Not every suggestion hits the mark, and that’s okay.

But the sheer capability to work through a codebase, propose changes, fix errors, open PRs, write tests, and more? That alone is worth far more than the $20 a month it costs. No question about it.


From: Damon Hart-Davis (Jun 08 2025, at 23:39)

It is almost entirely the climate aspect that makes me recoil in horror, with the estimate of an 'enhanced' Google search response costing ~2 OOM more than a normal one, and almost always being at least partly wrong. I had to more or less stop using G until I found a way to disable the AI nonsense for my normal workflow. My day job/PhD is sustainability, and I can't be wasting energy like that!

(I have an ancient AI degree, I have shipped product(s) with something like AI in, I am not against AI in its various flavours. I am against bullshitting profligate LLMs being confused with oracles.)


From: Mark Carroll (Jun 09 2025, at 07:11)

I can imagine genAI helping education by providing students with an interactive, anytime tutor who is tirelessly patient at answering their questions about things they're confused about. I've used it myself for things I was idly wondering about in modern physics, like quantum locality. For my software work, I haven't found genAI great at writing code, but it's fine for instructive higher-level conversations about technologies I'm not very familiar with. However, for me its value is greatly harmed by the fact that I can't entirely trust anything it says.


From: Simon Willison (Jun 09 2025, at 09:42)

"I can’t buy for a second that genAI is anything but toxic for anything education-related"

Having an always-available AI teaching assistant really is wildly useful for learning things, IF you can use it responsibly. But that IF is doing so much work!

It's also the world's most effective tool for cheating, harming educational outcomes and resulting in a total breakdown in how teachers can evaluate their students.

One of the most frustrating patterns in classroom-based learning is when you fail to understand one crucial detail and now the rest of the class is moving on without you. Deployed in the right way (and I do not know what that "right way" looks like) LLM-based digital teaching assistants should be able to solve that problem, which feels deeply non-toxic to me.

I already see this in learning-to-program contexts. New learners used to get derailed by a missing semicolon; LLMs have entirely solved that, which greatly reduces that initial learning curve and means that huge numbers of people are now getting started learning to program where previously they would have fallen off the curve for lack of direct support to help them overcome those frustrating early errors.


From: Rob (Jun 09 2025, at 10:30)

Those of us outside the tech world are also under a massive assault of AI marketing hype, with even less clarity around possible effective use-cases. Sadly our leadership and governance folks, and our customers, are totally unqualified to deal with it. There is a truly staggering amount of FOMO entailed, and we are regularly barraged with directives and queries about how we are incorporating all this wonderful tech before we are left behind, lying shamed and beaten in the dust.

Anyway, I'm not a tech guy, but I do know a bit about life sciences, and I do wonder where the incestuous coprophagy of the whole thing will lead, as AI eats and generates and then re-eats greater and greater proportions of the text floating around the web. At what point do we stop calling them "hallucinations" or even "lies," and instead understand it as the effects of kuru or BSE? Are there such things as AI "prions"?

The thing is, as an old Arts major, my primary academic training was in putting together coherent and effective bodies of text (writing essays), a task which seemed at the time, and I think still does, to absolutely terrify your average STEM student. They often seem to think that the task of writing effective text is mysterious, esoteric, and onerous beyond measure; hence the thirst to automate it, even with a 3rd-rate product.

It gets even worse with so much of the world living and working in ESL with massive insecurities about their English communication skills. (Aside; I really do wonder how the AI systems do in languages other than English, where the volume of text accessible to LLMs is significantly smaller...)

But the problem is that "AI" generated text is, over and above the hallucinations and lies, frankly, at best just Not Very Good, and at worst, wretchedly bad indeed.

Well, there are people out there who actually think McDonald's "fūd" is comestible; similarly, there are folks who will be satisfied with text-glop, I guess. It's about to become a substantially lower-quality information world, tho. It's very sad that we will burn the world to produce it.

I believe someone once said something like "reality is a text-based application." If so, we are looking at a degraded future.


From: Colin Nicholls (Jun 09 2025, at 11:08)

I agree with all your points, Tim. One thing I haven't seen mentioned is the inevitable consequence of being reliant on AI services in an unregulated industry. Once you are hooked on those coding tools, $20/mth will become $30, $60, etc. Those investors aren't going to be satisfied with "breaking even," which is still a long way away.

Oh, and those windowless buildings? They will probably make adequate incarceration points.


From: jb (Jun 09 2025, at 15:33)

https://www.baldurbjarnason.com/2025/trusting-your-own-judgement-on-ai/


From: Mark Levison (Jun 09 2025, at 17:03)

Tim - I think we're a long way off on many fronts.

LLMs are very very good at generating text in response to a prompt.

They're good at generating code, as long as we don't mind duplication, an increase in technical debt, etc.

GitClear has conducted a thorough analysis of data based on the users of their "Software Intelligence System." (https://www.gitclear.com/coding_on_copilot_data_shows_ais_downward_pressure_on_code_quality). This analysis compared 2023 with prior years, so it predates the biggest growth period for LLM use. I expect this understates the problems we will see in 2024 and beyond.

They see an increase in the volume of code added, an increase in churn, an increase in copy-and-pasted code, and a decrease in the amount of code moved.

I suspect 2025 will destroy some companies under the technical debt.

Going faster in the wrong place is going to make many things more fragile. (My own blog post linked above shows some risks).


From: Hugh Fisher (Jun 09 2025, at 19:53)

Not too long ago science fiction concepts such as "cyberspace" "metaverse" and "singularity" became mainstream, or at least more widely understood.

Maybe it's time for another: "Butlerian Jihad" from the Dune books. No reason we can't have one now instead of waiting another fourteen thousand years.

(For the non-Dune readers, it's a religious crusade, "Thou shalt not make a machine in the likeness of a human mind," that smashes all the AI systems, anything that might potentially be an AI system, and often anyone owning a thing that might be an AI.)

"The genie is out of the bottle" is an argument used as to why we can't ban AI. Yes we can. Slavery two hundred years ago was a worldwide institution that had been around for thousands of years. Now it's not. (Yes not totally abolished, but there are very few places on this planet where it's safe to admit you are a slave owner / trader.)


From: Tim (Jun 10 2025, at 12:01)

YCombinator discussion of this piece here: https://news.ycombinator.com/item?id=44222885

I think it’s instructive.


From: Gahlord (Jun 10 2025, at 13:41)

Data Center collapse is probably already underway: https://www.technologyreview.com/2025/03/26/1113802/china-ai-data-centers-unused

The reasons cited in the article feel pretty hand-wavey amid all the investor sweatiness.


From: Mark Bernstein (Sep 26 2025, at 19:47)

> I really don’t think there’s a coherent pro-genAI case to be made in the education context.

Current LLMs are absolutely superb at recommending books and articles on almost any topic. Want the best stuff on octopus cognition? Got it! Want the latest update on Nero’s rotating dining room? Got that too — though the papers are all in French and Italian.

DeepL is remarkably good at a wide range of languages, including minority languages such as Ukrainian. Not entirely correct, but then Ivan in Wharton 306 might not be right all the time, either.

I’ve been writing a bunch of academic conference papers this summer, and LLMs have been really helpful.
