Testing the T5120

This was going to be a Wide Finder Project progress report, but I ended up writing so much about the server that I’d better dedicate another fragment to the comparisons of all those implementations; especially since there are still lots more implementations to test. So this is a hands-on report on a couple of more-or-less production T5120’s, the T2-based server that’s being announced today. Headlines: The chip is impressive but weird; astounding message-passing benchmark numbers; fighting the US DoD.

Logistics · I actually didn’t start the Wide Finder work because we were about to announce these servers, it was just an interesting idea one afternoon that’s kind of spiraled out of control. But when I realized the date was imminent, testing some of the code on the new box was a no-brainer.

Actually getting to use one was a problem. Without going into the details of Sun’s release process, the period after the server’s been validated and the first wave is coming out of manufacturing is pretty intense and weird. I don’t know if this is a forward-looking business statement, but it seems that all the ones that have been built have been sold; I know this because a certain number of servers that were supposed to be for internal use got diverted to customers.

For example, the one I was working on one afternoon last week, trying to get Erlang to act sane. I got a message out of the blue: “Hi Tim, this is Brian, you don’t know me. Can you be off that box in a half-hour? We have to wipe and re-install and ship it to the Department of Defense by tomorrow morning.” I was pissed, but customers obviously come first and fighting the US DoD is generally a bad idea.

I never saw any of these; they were just addresses like sca12-3200a-40.sfbay.sun.com, equipped with Solaris 10, an ssh server, and damn little else.

Logistics and I/O · I tested on two servers; the one they re-routed me to was apparently about the same as the one the United States Armed Forces snagged: 8 cores at 1.4GHz, 16G of RAM, with some pukey little 80G local partition mounted. I probably should have poked around and built a nice ZFS pool on the rest of that disk, but my Solaris-fu is weak and my time was short.

The reason I say that is that the filesystem performance was kind of weird. If you look at the specs on these suckers, they ought to be I/O monsters, and in fact the T1 servers are; but I suspect it’s really worthwhile putting a little effort into getting things set up. On that little local partition, a run of Bonnie with a 16G file showed that stdio could fairly easily max out one core just reading data (getting excellent rates, mind you). I mention this not because it’s indicative of the system performance (this disk setup is obviously not typical of anything you’d put into production) but because it may well be relevant to the Wide Finder numbers.

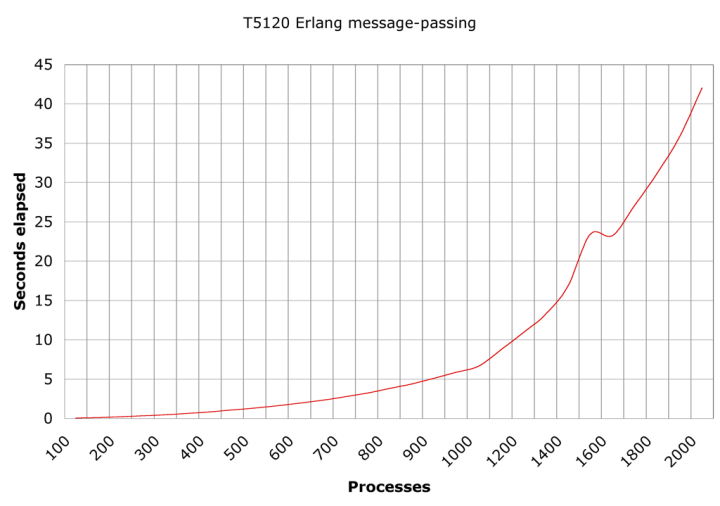

What It’s Good At · I ran the Erlang message-passing benchmark that Rob Sayre ran on the T2000. Check his graph, and compare this.

On the T1, the best time to run 1000 processes was just under 30 seconds; on the T2, the same run took under 7 seconds. I wonder if there’s any computer of any description anywhere in the world that can beat this?

It’s Weird · Let me illustrate the weirdness by showing a run of Steve Vinoski’s tbray5 Erlang code on a 971 MB file with about four million logfile entries:

~/n2/code/> time erl -noshell -smp -run tbray5 main 400 ../logs/mar.ap

463455 matches found

Delta runtime 2097520, wall clock 72939

real 1m13.346s

user 34m57.821s

sys 1m5.211s

Now, a minute and a quarter or so elapsed is very good; in fact, the fastest of any of the implementations I’ve tested so far. But the amount of user CPU reported is weird (the figure the program prints, in milliseconds, and the time output are consistent): it shows 28 times as much CPU time as elapsed time.
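For anyone who wants to check that arithmetic, here is a minimal sketch in Python. It uses only the figures printed above; the helper name `parse_duration` is made up for illustration, and it only understands the `NmS.SSSs` form that `time` printed in that run.

```python
import re

def parse_duration(text):
    """Convert a time-style duration such as '34m57.821s' to seconds."""
    match = re.fullmatch(r"(?:(\d+)m)?(\d+(?:\.\d+)?)s", text.strip())
    if match is None:
        raise ValueError(f"unrecognized duration: {text!r}")
    minutes = int(match.group(1) or 0)
    return minutes * 60 + float(match.group(2))

# Figures copied from the run shown above.
real_s = parse_duration("1m13.346s")
user_s = parse_duration("34m57.821s")
delta_runtime_ms = 2097520   # "Delta runtime", as printed by the program
wall_clock_ms = 72939        # "wall clock", as printed by the program

# CPU time divided by elapsed time, computed from each source separately.
ratio_from_time = user_s / real_s
ratio_from_program = delta_runtime_ms / wall_clock_ms

print(f"time output: {ratio_from_time:.1f}x CPU per elapsed second")
print(f"program:     {ratio_from_program:.1f}x CPU per elapsed second")
```

Both ratios land between 28 and 29, and the program's runtime figure agrees with the `user` line to within a second, which is what "consistent" means here.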

Well, this puppy has eight cores, each with eight logical threads, so Solaris sees it as having 64 logical processors. While Steve’s code was running, the Unix command-line utilities were reporting the CPU as maybe 50% busy, i.e. 32 processors working, 32 idle, so that is kind of consistent with 28 times as much CPU as elapsed. But hold on a dag-blaggin’ second, there are really only eight cores, right? So I went sniffing around among various hardware and Solaris experts, and here’s what’s going on.

First of all, each of the eight cores has two integer threads; so it’s kind of like having 8 dual-core cores (yes, I know that sounds absurd). So reporting a CPU time of 16 times the clock time might be plausible; but not 28.

Further poking dug up the answer: it seems that the hardware doesn’t tell the OS how it’s sharing out the cycles among the threads that it has runnable at any point in time. So Solaris just credits them with user CPU time whenever they’re in Run state. The results will be correct when you have up to sixteen threads staying runnable; above that they get funky. I gather the T1 had this peculiarity too.
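To make that concrete, here is a toy model of the accounting in Python. It is a deliberate simplification built only from the description above, not a picture of how Solaris or the T2 actually schedule anything: it assumes runnable threads are spread evenly across cores, that every thread sitting on a hardware thread is credited the full interval, and that at most two per core can really be executing integer work at once. All the names are invented for illustration.

```python
CORES = 8
HW_THREADS_PER_CORE = 8         # what Solaris sees: 8 x 8 = 64 logical processors
INTEGER_THREADS_PER_CORE = 2    # "two integer threads" per core, per the text

def reported_cpu_seconds(runnable_threads, elapsed_seconds):
    """CPU time credited if every thread in Run state is charged the whole interval."""
    on_hardware = min(runnable_threads, CORES * HW_THREADS_PER_CORE)
    return on_hardware * elapsed_seconds

def executing_capacity_seconds(runnable_threads, elapsed_seconds):
    """Upper bound on integer execution time really available (assumed model)."""
    executing = min(runnable_threads, CORES * INTEGER_THREADS_PER_CORE)
    return executing * elapsed_seconds

def overstatement(runnable_threads, elapsed_seconds=1.0):
    """Ratio of credited CPU time to the capacity bound; 1.0 means the report is fair."""
    return (reported_cpu_seconds(runnable_threads, elapsed_seconds)
            / executing_capacity_seconds(runnable_threads, elapsed_seconds))

for threads in (8, 16, 32, 64):
    print(threads, "runnable threads -> overstatement factor", overstatement(threads))
```

In this model the report is fair up to sixteen runnable threads and overstates by a factor of two at 32, which is the shape of the puzzle above: roughly 28 threads' worth of credited CPU on a box that can only be doing 16 threads' worth of integer work at a time.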

This is not a big deal once you understand what’s going on, but it sure had me puzzled for a while. And holy cow, can you ever grind through a monster integer computing workload with this puppy.

I’ll stop here, and come back with a bunch of reports on all those Wide Finder implementations. Assuming nobody steals my server in the meantime.

Comment feed for ongoing:

From: Paul Brown (Oct 09 2007, at 13:12)

Just a bare -smp may or may not have the effect that you want, since it lets the emulator choose the number of schedulers that it thinks will be best. You might also try varying the number of schedulers (with +S) and see how that impacts performance. Depending on which kind of thread the emulator is using and how the OS maps threads to cores, the naive number of scheduler threads may not be the most performant setting.

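A sketch of the experiment Paul suggests, in Python: rerun the same command with different `+S` values and time each one. The command line is the one shown earlier with `+S N` added; the function names, the list of scheduler counts, and the injectable `run` parameter are assumptions made for illustration, and the `400` is passed through unchanged as whatever argument `tbray5` expects.

```python
import subprocess
import time

def erl_command(schedulers, main_arg="400", logfile="../logs/mar.ap"):
    """Build the erl invocation from the post, with an explicit +S scheduler count."""
    return ["erl", "-noshell", "-smp", "+S", str(schedulers),
            "-run", "tbray5", "main", main_arg, logfile]

def sweep(scheduler_counts, run=subprocess.run):
    """Run the command once per scheduler count; return wall-clock seconds for each."""
    timings = {}
    for count in scheduler_counts:
        start = time.perf_counter()
        run(erl_command(count), check=True)   # raises if erl exits non-zero
        timings[count] = time.perf_counter() - start
    return timings

if __name__ == "__main__":
    # Hypothetical choice of counts; needs erl and the tbray5 code on the path.
    for count, seconds in sweep([8, 16, 32, 64]).items():
        print(f"+S {count}: {seconds:.1f}s elapsed")
```

This times elapsed wall clock only, since on this hardware the reported CPU time is the misleading number.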