I’ve been working on some ideas for clean-screen apps; instead of controlling them with the touch screen, you wave your device around or tap it or shake it. To do this, I’ve been learning about the output of the sensors you find on Android devices. I’ve found that the documentation, while complete, contains some scary-looking math and assumes you know more about quaternions and rotation vectors than the average developer. Well, more than I do.

So I created a little app called “Sensplore” that captures sensor data, dresses it up in CSV (spreadsheet) format, and emails it to you. It’s Apache 2-licensed and hosted on Google Code; if you just want to run it, grab it from Google Play.

It took me a couple of tries, a few visits to StackOverflow.com, and some input from Android engineering to get this right; for example, the graphs I recently published in “Sensor Kinetics Pictures” were, well, wrong. I’ve found it really quite helpful in figuring out what the sensors do. It only runs on devices with Gingerbread (Android 2.3) or later.

What it does · When you run the app, it fills the screen with a button saying “Go!” Once you press that, you can shake or tap or wave the device as much as you want, then hit the button again, which will have changed to read “Done!”

When you’re done, Sensplore writes out the CSV and fires an Intent with ACTION_SEND, which pops up a list of apps that can do the sending. If you pick Gmail, it shows up as an email from you to you, with the subject filled in and the CSV data attached, so you can download it, fire up your favorite spreadsheet program, and have it draw graphs of what the sensor data is showing you.

[Digression: This made me feel a little bit silly; I’d been toying with all sorts of ideas for getting the data off the device, including RESTful back-ends and the like. The idea of just emailing it seemed impossibly clunky at first blush, but in practice it works pretty well; it brings up the Gmail app, and I found myself tapping in a few reminder notes to myself before hitting “Send”.]

I suspect that someone who really understands app scripting could set up their favorite spreadsheet to draw the graphs automatically; I end up selecting ranges and asking for graphs, which feels a bit laborious.

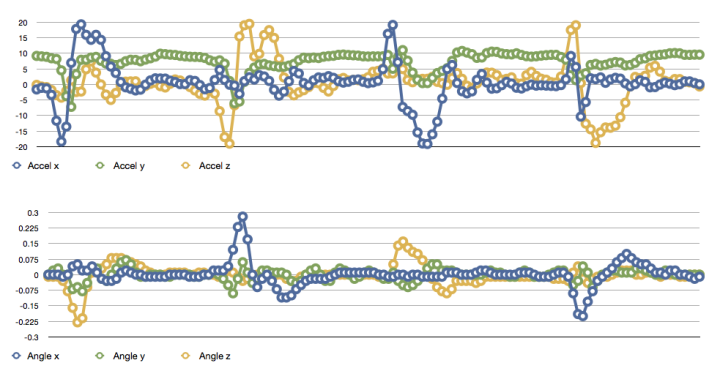

What it captures · Sensplore generates CSV containing two output sets, each with four columns of numbers. Each set has a time (msec) column and three floating-point x, y, and z values.
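Here is a guess at one reasonable layout for a single output set, as a small plain-Java sketch: a title line, a header row, then one row per sample holding the time in milliseconds since the set’s first sample plus x, y, and z. SampleCsv and Sample are hypothetical names, not Sensplore’s, and the real file may be arranged differently. The one fact it leans on is that SensorEvent.timestamp is in nanoseconds, hence the division by 1,000,000.

```java
import java.util.List;
import java.util.Locale;

/**
 * Hypothetical CSV layout for one output set: a title line, a header row,
 * then one row per sample with time in msec since the set's first sample.
 */
final class SampleCsv {
    /** One reading; timestampNanos plays the role of SensorEvent.timestamp (nanoseconds). */
    static final class Sample {
        final long timestampNanos;
        final float x, y, z;

        Sample(long timestampNanos, float x, float y, float z) {
            this.timestampNanos = timestampNanos;
            this.x = x;
            this.y = y;
            this.z = z;
        }
    }

    static String toCsv(String title, List<Sample> samples) {
        StringBuilder sb = new StringBuilder();
        sb.append(title).append('\n');
        sb.append("time (msec),x,y,z\n");
        if (samples.isEmpty()) {
            return sb.toString();
        }
        long t0 = samples.get(0).timestampNanos;
        for (Sample s : samples) {
            // Nanoseconds since the first sample, converted to whole milliseconds.
            long msec = (s.timestampNanos - t0) / 1_000_000L;
            // Locale.ROOT keeps '.' as the decimal mark, so a comma-decimal
            // default locale can't corrupt the CSV columns.
            sb.append(msec).append(',')
              .append(String.format(Locale.ROOT, "%.4f,%.4f,%.4f", s.x, s.y, s.z))
              .append('\n');
        }
        return sb.toString();
    }
}
```

A two-set file would then be the accelerometer set’s CSV, a blank line, and the angle-change set’s CSV, which most spreadsheets will open without complaint.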

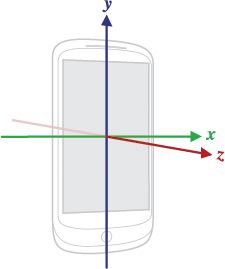

The first group is the raw data you get from a Sensor.TYPE_ACCELEROMETER sensor, which is to say acceleration along the x, y, and z axes in device space; the axes are illustrated in this image from the SensorEvent docs.

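You have to be careful with this data; “acceleration” may not mean what you think it does. For one thing, it includes gravity: a device lying flat and motionless on a table reports about 9.81 m/s² along its z axis, not zero. I’ll probably have more to say later about best practices for using this stuff, but a look at the data is instructive.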
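To make the gravity point concrete, here is a minimal plain-Java sketch of the low-pass/high-pass split shown in the SensorEvent documentation: a running low-pass estimate isolates gravity, and subtracting it leaves the “linear” acceleration. The class name GravitySplitter is hypothetical; the 0.8 smoothing factor is the value used in that documentation example and would need tuning for your sample rate; and the inputs are plain floats rather than a real SensorEvent so the sketch runs off-device.

```java
/**
 * Hypothetical helper that splits accelerometer readings (m/s^2) into a
 * slowly-varying gravity estimate and the remaining linear acceleration.
 */
final class GravitySplitter {
    private final float alpha;                  // closer to 1 = smoother, slower gravity estimate
    private final float[] gravity = new float[3];
    private final float[] linear = new float[3];

    GravitySplitter(float alpha) {
        this.alpha = alpha;
    }

    /** Feeds one reading and returns a copy of the estimated linear acceleration. */
    float[] update(float x, float y, float z) {
        float[] v = {x, y, z};
        for (int i = 0; i < 3; i++) {
            // Low-pass filter: gravity changes slowly, so it survives the smoothing.
            gravity[i] = alpha * gravity[i] + (1 - alpha) * v[i];
            // High-pass residue: whatever the smoothing didn't explain.
            linear[i] = v[i] - gravity[i];
        }
        return linear.clone();
    }

    /** Returns a copy of the current gravity estimate. */
    float[] gravity() {
        return gravity.clone();
    }

    /** Length of an acceleration vector; about 9.81 for a device at rest. */
    static float magnitude(float x, float y, float z) {
        return (float) Math.sqrt(x * x + y * y + z * z);
    }
}
```

Because the gravity estimate starts at zero, the first few outputs are dominated by gravity until the filter settles. On devices that have them, the TYPE_GRAVITY and TYPE_LINEAR_ACCELERATION sensors (also added in API level 9) do this separation for you; the filter is mostly useful for understanding what they report.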

The second group represents successive changes in rotation angle around the x, y, and z axes. It’s not measured directly; we take the samples from the Sensor.TYPE_ROTATION_VECTOR sensor, turn them into rotation matrices using SensorManager.getRotationMatrixFromVector(), then extract changes from successive pairs of matrices using SensorManager.getAngleChange(). Once again, these are in device, not world, co-ordinates.

The code for doing this is easy to get wrong, so if you’re going to want data like this, stealing a few lines from Sensplore might be a good idea.
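Here is a plain-Java sketch of that pipeline, written so it can run off-device. rotationMatrixFromVector and angleChange follow the formulas I believe SensorManager.getRotationMatrixFromVector() and SensorManager.getAngleChange() use: a unit-quaternion-to-matrix conversion, then angles extracted from transpose(prevR)·R. On a real device you would call those SensorManager methods instead. Note that getAngleChange’s output is documented as ordered z, x, y at indices 0, 1, and 2, not x, y, z. AngleChangeTracker and its update() method are hypothetical names; the point of the class is the bookkeeping, which is where it’s easiest to slip up.

```java
/** Hypothetical tracker: rotation-vector samples in, per-sample angle changes out. */
final class AngleChangeTracker {
    private float[] prev = null;            // matrix from the previous sample
    private float[] current = new float[9]; // scratch matrix for the newest sample

    /** Rotation vector (x, y, z[, w]) -> 3x3 row-major rotation matrix. */
    static void rotationMatrixFromVector(float[] R, float[] rv) {
        float q1 = rv[0], q2 = rv[1], q3 = rv[2];
        float q0;
        if (rv.length >= 4) {
            q0 = rv[3];
        } else {
            // Recover the scalar part of the unit quaternion.
            float w2 = 1 - q1 * q1 - q2 * q2 - q3 * q3;
            q0 = w2 > 0 ? (float) Math.sqrt(w2) : 0;
        }
        float sqQ1 = 2 * q1 * q1, sqQ2 = 2 * q2 * q2, sqQ3 = 2 * q3 * q3;
        float q1q2 = 2 * q1 * q2, q3q0 = 2 * q3 * q0, q1q3 = 2 * q1 * q3;
        float q2q0 = 2 * q2 * q0, q2q3 = 2 * q2 * q3, q1q0 = 2 * q1 * q0;
        R[0] = 1 - sqQ2 - sqQ3; R[1] = q1q2 - q3q0;     R[2] = q1q3 + q2q0;
        R[3] = q1q2 + q3q0;     R[4] = 1 - sqQ1 - sqQ3; R[5] = q2q3 - q1q0;
        R[6] = q1q3 - q2q0;     R[7] = q2q3 + q1q0;     R[8] = 1 - sqQ1 - sqQ2;
    }

    /** Angle change (z, x, y) in radians of the rotation taking prevR to R. */
    static void angleChange(float[] out, float[] R, float[] prevR) {
        // rd = transpose(prevR) * R; only five entries are needed.
        float rd1 = prevR[0] * R[1] + prevR[3] * R[4] + prevR[6] * R[7];
        float rd4 = prevR[1] * R[1] + prevR[4] * R[4] + prevR[7] * R[7];
        float rd6 = prevR[2] * R[0] + prevR[5] * R[3] + prevR[8] * R[6];
        float rd7 = prevR[2] * R[1] + prevR[5] * R[4] + prevR[8] * R[7];
        float rd8 = prevR[2] * R[2] + prevR[5] * R[5] + prevR[8] * R[8];
        out[0] = (float) Math.atan2(rd1, rd4);
        out[1] = (float) Math.asin(-rd7);
        out[2] = (float) Math.atan2(-rd6, rd8);
    }

    /** Returns null for the first sample, else the (z, x, y) change since the last one. */
    float[] update(float[] rotationVector) {
        rotationMatrixFromVector(current, rotationVector);
        if (prev == null) {
            prev = current.clone();
            return null;
        }
        float[] change = new float[3];
        angleChange(change, current, prev);
        // Swap buffers rather than writing "prev = current": aliasing the two
        // arrays would make every later angle change come out as zero.
        float[] tmp = prev;
        prev = current;
        current = tmp;
        return change;
    }
}
```

Fed a rotation vector of (0, 0, 0) and then (0, 0, sin 45°), which is a 90° turn about z, the second call returns roughly (−π/2, 0, 0), and feeding the same vector again returns roughly zero. Since Android may reuse SensorEvent objects, it’s also worth converting event.values to a matrix inside onSensorChanged() rather than holding onto the array.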

What you see is what you get · Here are two graphs, produced by Sensplore and iWork’s “Numbers” spreadsheet, showing what happens when I hold my Galaxy Nexus upright facing me and make chopping motions to the left, back, right, and forward, returning to vertical after each chop. The first graph is the accelerometer data, the second the angle changes.

Caveats · I’m not claiming that these are the only interesting sensors. I’m not claiming that the data they produce, or the way that Sensplore processes it, will meet your needs. This isn’t an official Google tool, and may not get any more attention from me; on the other hand, I may use it as a workbench to explore other sensors and how to analyze their data.

Having said all that, programming up against the analog-to-digital coalface is a new experience for many of us, and anything that helps us understand the raw data might prove useful to a few. After all, this is one area in which mobile-device software is profoundly different; waving ordinary computers around in the air is rarely useful.